This is the blog section. It has two categories: News and Releases.

Files in these directories will be listed in reverse chronological order.

All the Ksctl announcements

We are thrilled to announce the release of Ksctl v2.6! This significant update introduces Advanced Customization for Cilium and Flannel CNI, empowering you to tailor your Kubernetes clusters’ networking to match your specific requirements and operational environment.

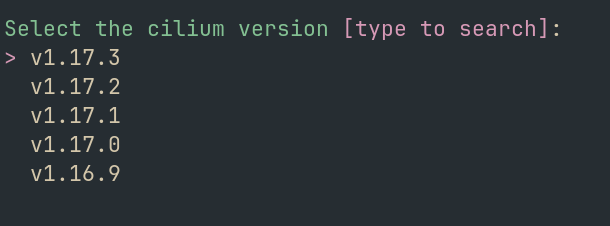

Version Selection

With the new Cilium customization options, you can precisely configure your Kubernetes network environment with granular control over security policies, load balancing, and network connectivity.

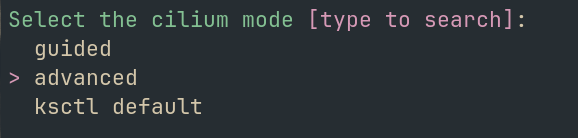

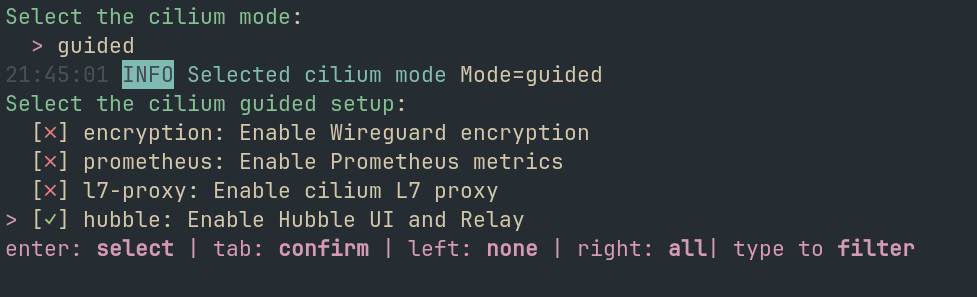

Choose from three flexible modes of customization:

Default Mode: Pre-configured setup with best practices implemented for immediate deployment

Advanced Mode: Maximum flexibility for networking experts, configured through values.yml files

Guided Mode: User-friendly interface with component-level control

For comprehensive documentation on Cilium configuration options, visit our Cilium Documentation.

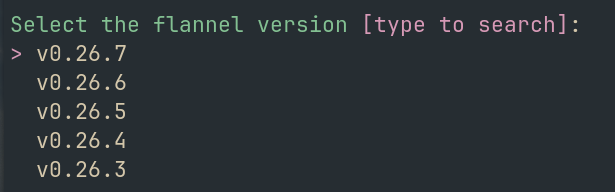

Flannel, known for its simplicity and reliability, now also offers similar customization capabilities:

Version Selection

For detailed instructions on Flannel configuration, see our Flannel Documentation.

Note: These customization features work with the ksctl cluster create command when specifying your CNI preference.

To upgrade your Ksctl CLI to the latest version, simply run:

ksctl self-update

This ensures you’re using the latest version with all the new features and improvements. 🚀

With Ksctl v2.6, managing Kubernetes networking has never been more flexible and powerful! Upgrade today to take advantage of these advanced CNI customization options.

We are thrilled to announce the release of Ksctl v2.7! This significant update introduces Cluster Summary, giving you both debugging information and security recommendations for your Kubernetes clusters.

This new logging system is designed to provide minimalistic, easy-to-read logs. It helps you debug your cluster with a single command.

The new cluster summary and recommendation feature provides a comprehensive overview of your cluster’s configuration, with both debugging information and security recommendations. With a single command, $ ksctl cluster summary, you can access critical details about your cluster.

This includes insights into pod errors, node metrics, cluster events, and latency reports.

Additionally, the feature offers recommendations for improving cluster security by checking for issues like PodSecurityPolicy, NamespaceQuota, PodResourceLimits & Requests, and NodePort configurations.

This tool is invaluable for users looking to optimize their cluster’s performance and security effortlessly.
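To make two of the checks listed above concrete, here is a minimal Python sketch that flags containers without resource requests or limits and Services exposed as NodePort. It is not Ksctl's implementation: the function names and sample manifests are hypothetical, and only the manifest fields (`spec.containers[].resources` and `spec.type`) follow the standard Kubernetes API.

```python
from typing import Any


def containers_missing_resources(pod: dict[str, Any]) -> list[str]:
    """Return names of containers that lack resource requests or limits."""
    missing = []
    for container in pod.get("spec", {}).get("containers", []):
        resources = container.get("resources") or {}
        if not resources.get("requests") or not resources.get("limits"):
            missing.append(container["name"])
    return missing


def is_nodeport_service(service: dict[str, Any]) -> bool:
    """Return True when a Service manifest is of type NodePort."""
    return service.get("spec", {}).get("type") == "NodePort"


# Hypothetical manifests, already parsed into dictionaries.
pod = {
    "metadata": {"name": "web"},
    "spec": {
        "containers": [
            {
                "name": "app",
                "resources": {
                    "requests": {"cpu": "100m"},
                    "limits": {"cpu": "500m"},
                },
            },
            {"name": "sidecar"},  # no resources block at all
        ]
    },
}
service = {"metadata": {"name": "web"}, "spec": {"type": "NodePort"}}

print(containers_missing_resources(pod))
print(is_nodeport_service(service))
```

A real check would read these objects from the cluster API rather than from literals; the sketch only shows what each recommendation looks for.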

For detailed instructions, head over to the Cluster Summary Documentation.

To upgrade your Ksctl CLI to the latest version, simply run:

ksctl self-update

This ensures you’re using the latest version with all the new features and improvements. 🚀

With Ksctl v2.7, managing Kubernetes networking has never been more flexible and powerful! Upgrade today to take advantage of these advanced features.

We are thrilled to announce the release of Ksctl v2.5! This update introduces Smart Card Selection, an intelligent feature that helps you select the best region and instance type for your Kubernetes clusters based on your requirements.

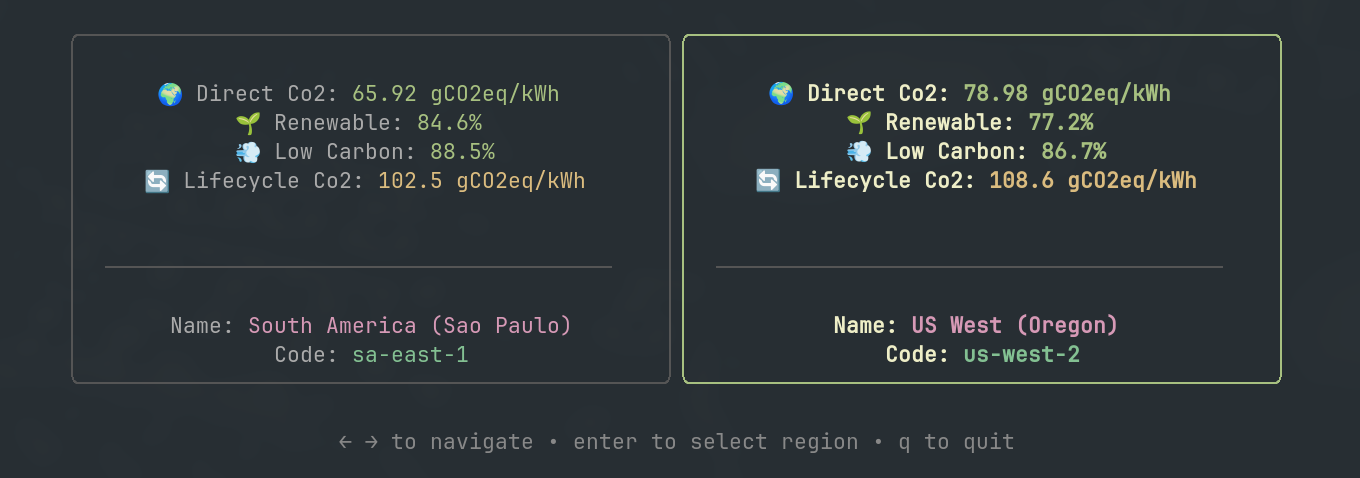

Ksctl can help you choose the most efficient region based on emission metrics, ensuring that your Kubernetes clusters are not only cost-effective but also environmentally friendly.

This intelligent selection ensures your Kubernetes clusters are deployed in regions that align with both cost-efficiency and sustainability goals.

Figure: Visualization of region selection optimization based on emission metrics.

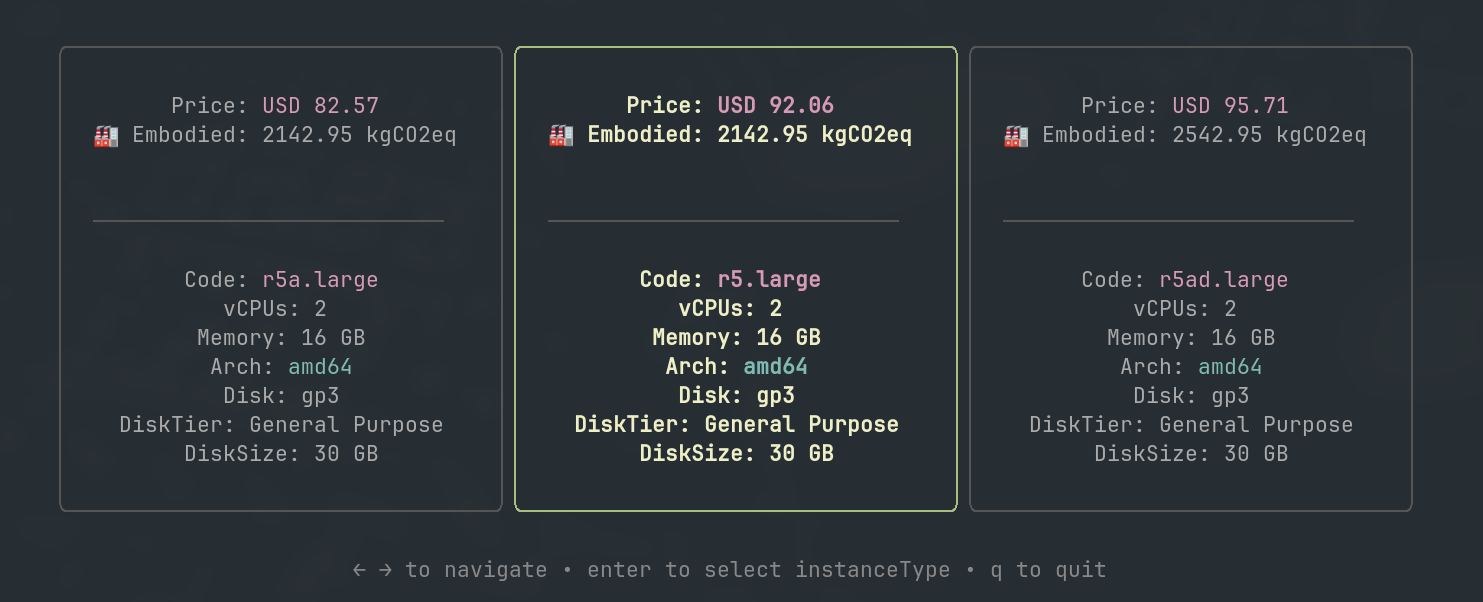

Ksctl can help you choose the most efficient instance type based on cost and embodied emissions, ensuring that your Kubernetes clusters are not only cost-effective but also environmentally friendly.

This ensures your Kubernetes clusters are optimized for both cost-efficiency and environmental sustainability.

Figure: Visualization of instance type selection optimization based on cost and embodied emissions.
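One way to reason about trading cost against embodied emissions is to keep only the instance types that no other candidate beats on both metrics. The Python sketch below illustrates that idea. It is not how Ksctl ranks instance types, and the instance names, prices, and emission figures are made-up placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceType:
    name: str
    monthly_cost_usd: float       # hypothetical price
    embodied_emissions_kg: float  # hypothetical embodied emissions


def non_dominated(candidates: list[InstanceType]) -> list[InstanceType]:
    """Keep candidates that no other candidate beats on both cost and emissions."""

    def dominates(a: InstanceType, b: InstanceType) -> bool:
        no_worse = (
            a.monthly_cost_usd <= b.monthly_cost_usd
            and a.embodied_emissions_kg <= b.embodied_emissions_kg
        )
        strictly_better = (
            a.monthly_cost_usd < b.monthly_cost_usd
            or a.embodied_emissions_kg < b.embodied_emissions_kg
        )
        return no_worse and strictly_better

    return [
        c for c in candidates
        if not any(dominates(other, c) for other in candidates)
    ]


candidates = [
    InstanceType("type-a", 70.0, 1.9),
    InstanceType("type-b", 62.0, 2.4),
    InstanceType("type-c", 75.0, 2.6),  # worse than type-a on both metrics
]

for choice in non_dominated(candidates):
    print(choice.name, choice.monthly_cost_usd, choice.embodied_emissions_kg)
```

The remaining candidates are the genuine trade-offs: each one is either cheaper or lower in emissions than every other survivor.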

This feature ensures you get maximum cost efficiency and minimum emissions while maintaining performance and reliability. This feature works with the ksctl cluster create command.

To upgrade your Ksctl CLI to the latest version, simply run:

ksctl self-update

This ensures you’re using the latest version with all the new features and improvements. 🚀

With Ksctl v2.5, managing Kubernetes just got more efficient and cost-effective! Upgrade today and take advantage of smart region switching to optimize your cloud expenses.

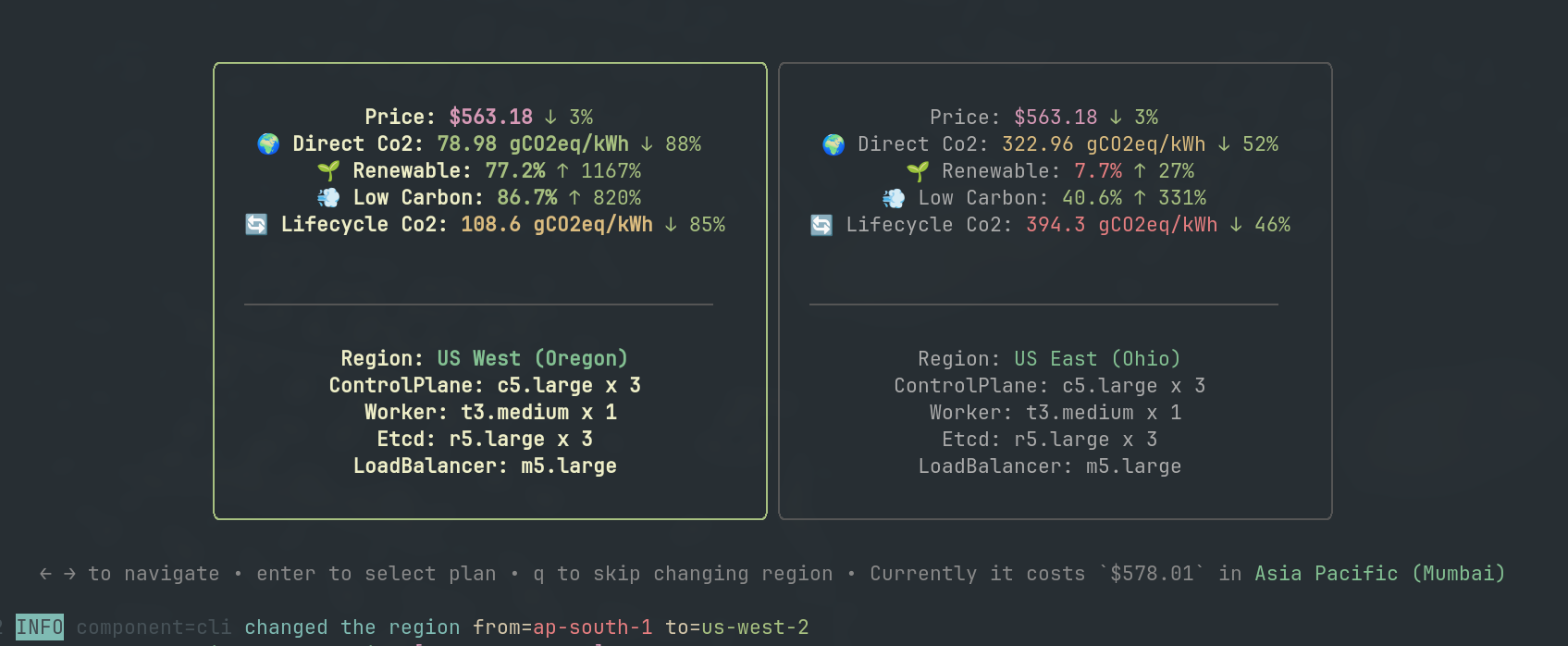

We are thrilled to announce the release of Ksctl v2.4! This update introduces Dynamic Region Switching, an intelligent cost and emission optimization feature that helps you minimize infrastructure expenses by selecting the most efficient region for your Kubernetes clusters—without changing your instance type.

Ksctl can now switch regions to optimize costs while maintaining the same instance type. This feature ensures that your clusters run in the most cost-effective, lowest-emission region without manual intervention.

This feature ensures you get maximum cost efficiency and minimum emissions while maintaining performance and reliability.
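As an illustration of the idea, the Python sketch below picks a region for a fixed instance type by combining normalized price and emissions into one score. Everything in it is an assumption made for the example: the region names, prices, emission values, and the weighting scheme are hypothetical and do not reflect Ksctl's internal logic or real cloud pricing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegionOffer:
    region: str
    hourly_price_usd: float     # hypothetical price for the same instance type
    emissions_g_per_kwh: float  # hypothetical grid emission intensity


def pick_region(offers: list[RegionOffer], cost_weight: float = 0.5) -> RegionOffer:
    """Pick the offer with the lowest weighted, min-max normalized score."""
    if not offers:
        raise ValueError("no regions to choose from")

    def normalize(value: float, low: float, high: float) -> float:
        # Map value into [0, 1]; a constant metric contributes 0 for every offer.
        return 0.0 if high == low else (value - low) / (high - low)

    prices = [o.hourly_price_usd for o in offers]
    emissions = [o.emissions_g_per_kwh for o in offers]

    def score(o: RegionOffer) -> float:
        price_part = normalize(o.hourly_price_usd, min(prices), max(prices))
        emission_part = normalize(
            o.emissions_g_per_kwh, min(emissions), max(emissions)
        )
        return cost_weight * price_part + (1 - cost_weight) * emission_part

    return min(offers, key=score)


offers = [
    RegionOffer("region-a", 0.096, 410.0),
    RegionOffer("region-b", 0.104, 120.0),
    RegionOffer("region-c", 0.118, 95.0),
]

print(pick_region(offers).region)                   # balanced weighting
print(pick_region(offers, cost_weight=1.0).region)  # price only
```

Setting `cost_weight` to 1.0 reduces the choice to price alone, while 0.0 considers emissions alone.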

This feature works with the ksctl cluster create command.

To upgrade your Ksctl CLI to the latest version, simply run:

ksctl self-update

This ensures you’re using the latest version with all the new features and improvements. 🚀

With Ksctl v2.4, managing Kubernetes just got more efficient and cost-effective! Upgrade today and take advantage of smart region switching to optimize your cloud expenses.

We are thrilled to announce the release of Ksctl v2.3! This update introduces Dynamic Region Switching, an intelligent cost-optimization feature that helps you minimize infrastructure expenses by selecting the most cost-effective region for your Kubernetes clusters—without changing your instance type.

Ksctl can now automatically switch regions to optimize costs while maintaining the same instance type. This feature ensures that your clusters run in the most cost-effective region without manual intervention.

This feature ensures you get maximum cost efficiency while maintaining performance and reliability.

This feature works with the ksctl cluster create command.

To upgrade your Ksctl CLI to the latest version, simply run:

ksctl self-update

This ensures you’re using the latest version with all the new features and improvements. 🚀

With Ksctl v2.3, managing Kubernetes just got more efficient and cost-effective! Upgrade today and take advantage of smart region switching to optimize your cloud expenses.

We are excited to announce the release of Ksctl v2.2.1! This release brings a host of bug fixes and new features to transform your Ksctl experience.

No need to know which instance type falls under which category. Just select the category and Ksctl will take care of the rest. This helps increase the performance and reduce the cost of the cluster.

Here is the new categorization of instance types with respect to the cluster type you have selected:

| Category | Amazon Web Services | Microsoft Azure |
|---|---|---|
| cpu-intensive | c5 | F-series |
| memory-intensive | r5 | E-series |
| general-purpose | m5 | D-series |
| burstable | t3 | B-series |
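The table above can be read as a simple lookup from category and cloud provider to an instance family. The Python sketch below encodes it that way. The provider keys (`aws`, `azure`) and the function name are hypothetical, chosen for this example only, and are not Ksctl identifiers.

```python
# Instance families taken from the table; the provider keys are assumptions.
INSTANCE_FAMILIES = {
    "cpu-intensive": {"aws": "c5", "azure": "F-series"},
    "memory-intensive": {"aws": "r5", "azure": "E-series"},
    "general-purpose": {"aws": "m5", "azure": "D-series"},
    "burstable": {"aws": "t3", "azure": "B-series"},
}


def instance_family(category: str, provider: str) -> str:
    """Look up the instance family for a workload category and provider."""
    try:
        return INSTANCE_FAMILIES[category][provider]
    except KeyError:
        raise ValueError(
            f"unknown category/provider: {category!r}/{provider!r}"
        ) from None


print(instance_family("memory-intensive", "aws"))
print(instance_family("burstable", "azure"))
```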

This release also includes new security fixes and bug fixes.

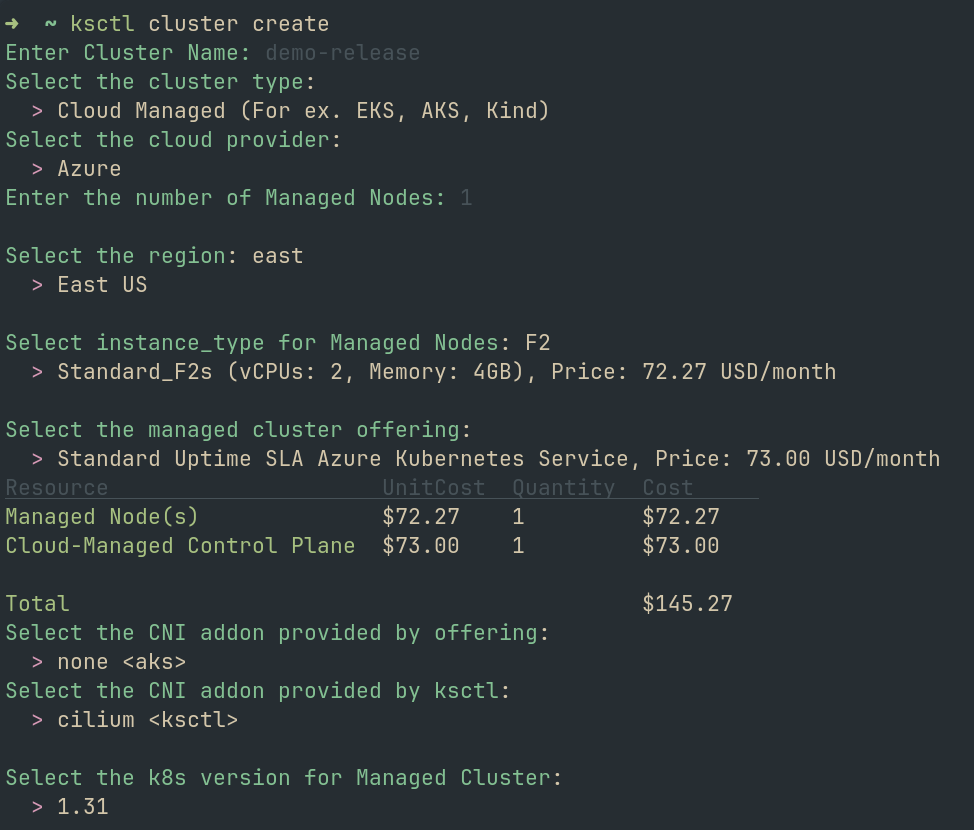

We’re thrilled to announce the release of Ksctl v2! This major update is designed to simplify your Kubernetes journey—whether you’re a seasoned operator or just starting out. With v2, we’ve reimagined the way you interact with your clusters, taking away the pain of endless command-line flags and help lookups, and putting a smooth, intuitive experience at your fingertips.

If you’ve ever found yourself repeatedly typing ksctl create --help just to recall the right flag, you’re not alone. Ksctl v2 was born out of our commitment to make Kubernetes cluster provisioning as frictionless as possible. Here’s what we aimed to solve with this release:

This release brings a host of improvements and new features to transform your Kubernetes management experience:

Redesign of Ksctl core: provisioner and cluster management components

Figure: v2.0.0 compared with v1.3.1.

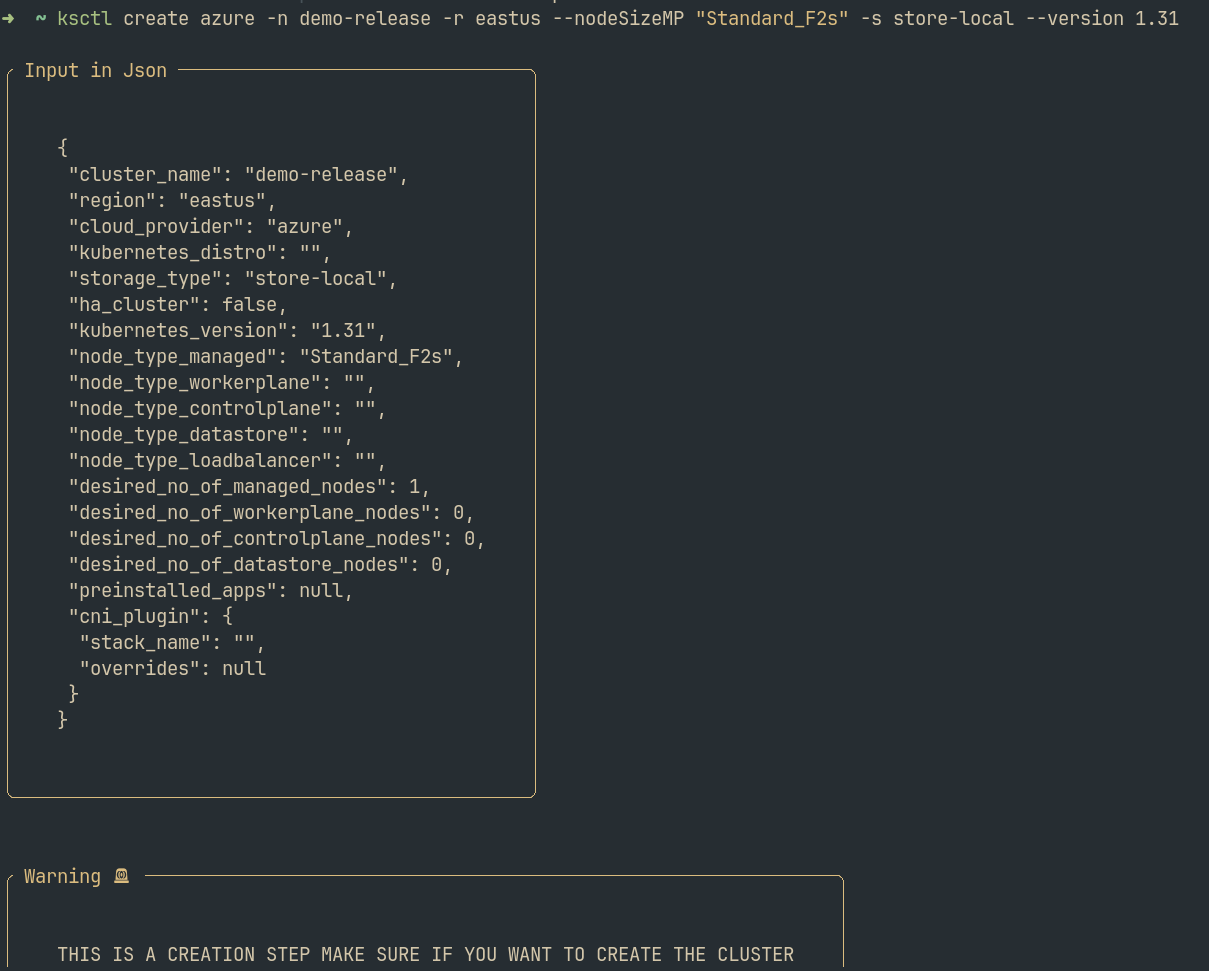

Previously, you had to remember the exact vm_type, region sku, version, and so on. Now you only need to specify the cloud provider, and Ksctl takes care of the rest: just choose from the select options. It also shows the cost of the instance_type along with the block storage. A great step towards making the tool more user-friendly. 🎉

$ ksctl configure ... command.

The ha keyword is replaced with selfmanaged to clearly indicate the type of cluster you’re operating.

Improved State Document: We’ve migrated versioning for components like Aks, Eks, Kind, HAProxy, and Etcd to a new field, ensuring better consistency and smoother migrations.

New Addons System: Customize your cluster with our new addons framework. Simply specify the addons you want (e.g., switching CNI from “none” to “cilium”) in your configuration, and let Ksctl handle the rest.

Here’s a snippet of the under-the-hood configuration Ksctl uses to create a Cilium 🐝 enabled cluster:

    {
      "cluster_name": "test-e2e-local-cilium",
      "kubernetes_version": "1.32.0",
      "cloud_provider": "local",
      "storage_type": "store-local",
      "cluster_type": "managed",
      "desired_no_of_managed_nodes": 2,
      "addons": [
        {
          "label": "kind",
          "name": "none",
          "cni": true
        },
        {
          "label": "ksctl",
          "name": "cilium",
          "cni": true
        }
      ]
    }
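To show how such a document could be consumed, the following Python sketch parses the same JSON and lists the addons that actually install a CNI. Treating `"name": "none"` as "do not install a CNI from this source" is our reading of the snippet, not documented Ksctl behavior, and the helper name is hypothetical.

```python
import json

CONFIG = """
{
  "cluster_name": "test-e2e-local-cilium",
  "kubernetes_version": "1.32.0",
  "cloud_provider": "local",
  "storage_type": "store-local",
  "cluster_type": "managed",
  "desired_no_of_managed_nodes": 2,
  "addons": [
    {"label": "kind", "name": "none", "cni": true},
    {"label": "ksctl", "name": "cilium", "cni": true}
  ]
}
"""


def selected_cni_addons(config: dict) -> list[tuple[str, str]]:
    """Return (label, name) pairs for CNI addons whose name is not "none"."""
    return [
        (addon["label"], addon["name"])
        for addon in config.get("addons", [])
        if addon.get("cni") and addon.get("name") != "none"
    ]


config = json.loads(CONFIG)
print(selected_cni_addons(config))
```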

When you run $ ksctl cluster connect, your kubeconfig is automatically merged into ~/.kube/config and the current context is set accordingly.

With this release, we’re making significant changes that break backward compatibility:

github.com/ksctl/ksctl/v2.

The civo cloud provider has been completely removed.

Please ensure you update your references and workflows accordingly to take full advantage of Ksctl v2’s capabilities.

Ksctl v2 is more than just an update; it’s a rethinking of the Kubernetes management experience. Our goal is to empower developers with a tool that handles the complexity behind the scenes, letting you focus on deploying and managing clusters with ease and helping you create amazing software. Whether you’re provisioning your first cluster or managing a fleet of production environments, Ksctl v2 is here to streamline your operations.

Ready to dive in? Check out the full changelog and get started with Ksctl v2 today!

Happy clustering!

The Ksctl Team
