Ksctl documentation

Documentation

- 1: Architecture

- 1.1: Api Components

- 2: Getting Started

- 3: Cloud Provider

- 3.1: Amazon Web Services

- 3.2: Azure

- 3.3: Kind

- 4: Reference

- 4.1: ksctl

- 4.2: ksctl_cluster

- 4.3: ksctl_cluster_addons

- 4.4: ksctl_cluster_addons_disable

- 4.5: ksctl_cluster_addons_enable

- 4.6: ksctl_cluster_connect

- 4.7: ksctl_cluster_create

- 4.8: ksctl_cluster_delete

- 4.9: ksctl_cluster_get

- 4.10: ksctl_cluster_list

- 4.11: ksctl_cluster_scaledown

- 4.12: ksctl_cluster_scaleup

- 4.13: ksctl_cluster_summary

- 4.14: ksctl_completion

- 4.15: ksctl_configure

- 4.16: ksctl_configure_cloud

- 4.17: ksctl_configure_storage

- 4.18: ksctl_configure_telemetry

- 4.19: ksctl_self-update

- 4.20: ksctl_version

- 5: Contribution Guidelines

- 5.1: Contribution Guidelines for CLI

- 5.2: Contribution Guidelines for Core

- 5.3: Contribution Guidelines for Docs

- 6: Concepts

- 6.1: Cluster Summary and Recommendation

- 6.2: Smart Cost & Emission Optimization

- 6.3: Cloud Controller

- 6.4: Core functionalities

- 6.5: Core Manager

- 6.6: Distribution Controller

- 7: Container Network Interface (CNI)

- 8: Contributors

- 9: Faq

- 10: Features

- 11: Ksctl Cluster Management

- 11.1: Ksctl Stack

- 12: Kubernetes Distributions

- 13: Roadmap

- 14: Search Results

- 15: Storage

- 15.1: External Storage

- 15.2: Local Storage

1 - Architecture

Architecture diagrams

1.1 - Api Components

Core Design Components

Design

Overview architecture of ksctl

Managed Cluster creation & deletion

Self-Managed Cluster creation & deletion

Architecture change to an event-based design for more capabilities

Note:

This is currently a work in progress (WIP).

2 - Getting Started

Getting Started Documentation

Installation & Uninstallation Instructions

Ksctl CLI

Let's begin by installing the tools. There are several methods.

Single command method

Install

Steps to Install Ksctl cli tool

curl -sfL https://get.ksctl.com | python3 -

Uninstall

Steps to Uninstall Ksctl cli tool

bash <(curl -s https://raw.githubusercontent.com/ksctl/cli/main/scripts/uninstall.sh)

zsh <(curl -s https://raw.githubusercontent.com/ksctl/cli/main/scripts/uninstall.sh)

From Source Code

Caution!

Under-development binaries

Note

The binaries for testing the ksctl CLI are available in the ksctl/cli repo.

make install_linux

# macOS on M1

make install_macos

# macOS on INTEL

make install_macos_intel

# For uninstalling

make uninstall

Configure Ksctl CLI

Configure

Steps to Configure Ksctl cli tool

ksctl configure cloud # To configure cloud

ksctl configure storage # To configure storage

How to start with cli

Here are the CLI references

3 - Cloud Provider

This page includes more information about the different cloud providers

3.1 - Amazon Web Services

AWS integration for Self-Managed and Managed Kubernetes Clusters

Caution

AWS credentials are required to access clusters. These credentials are sensitive information and must be kept secure.

Authentication Methods

Command Line Interface

Use the ksctl credential manager:

ksctl configure cloud

Available Cluster Types

Self-Managed Clusters

Self-managed clusters with the following components:

- Distributed etcd database instances

- HAProxy load balancer for control plane high availability

- Multiple control plane nodes

- Worker nodes

Choose between two bootstrap options:

- k3s (lightweight Kubernetes distribution)

- kubeadm (official Kubernetes bootstrap tool)

Amazon EKS (Managed Clusters)

Elastic Kubernetes Service deployment with automated:

- IAM role creation and management

- Control plane setup

- Node group configuration

IAM Configuration

For each cluster, ksctl creates two roles:

- ksctl-<clustername>-wp-role: Manages node pool permissions

- ksctl-<clustername>-cp-role: Handles control plane access
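As a small illustration of the naming scheme above, the two role names can be derived from a cluster name. The helper `ksctl_role_names` is hypothetical and not part of ksctl; only the `ksctl-<clustername>-wp-role` and `ksctl-<clustername>-cp-role` patterns come from this page.

```python
def ksctl_role_names(cluster_name: str) -> dict[str, str]:
    """Return the IAM role names documented for a given cluster name.

    Hypothetical helper: only the name patterns are taken from this page.
    """
    if not cluster_name:
        raise ValueError("cluster_name must not be empty")
    return {
        "node_pool": f"ksctl-{cluster_name}-wp-role",
        "control_plane": f"ksctl-{cluster_name}-cp-role",
    }


if __name__ == "__main__":
    # "demo" is a placeholder cluster name.
    print(ksctl_role_names("demo"))
```

This can be handy when looking up or auditing the roles that belong to one cluster in the IAM console.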

Required IAM Policies

- Custom IAM Role Access Policy

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "VisualEditor6",

"Effect": "Allow",

"Action": [

"iam:CreateInstanceProfile",

"iam:DeleteInstanceProfile",

"iam:GetRole",

"iam:GetInstanceProfile",

"iam:RemoveRoleFromInstanceProfile",

"iam:CreateRole",

"iam:DeleteRole",

"iam:AttachRolePolicy",

"iam:PutRolePolicy",

"iam:ListInstanceProfiles",

"iam:AddRoleToInstanceProfile",

"iam:ListInstanceProfilesForRole",

"iam:PassRole",

"iam:CreateServiceLinkedRole",

"iam:DetachRolePolicy",

"iam:DeleteRolePolicy",

"iam:DeleteServiceLinkedRole",

"iam:GetRolePolicy",

"iam:SetSecurityTokenServicePreferences"

],

"Resource": [

"arn:aws:iam::*:role/ksctl-*",

"arn:aws:iam::*:instance-profile/*"

]

}

]

}
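The `Resource` list in the policy above limits the IAM actions to roles whose names start with `ksctl-` and to instance profiles. A rough way to see which ARNs fall inside those patterns is sketched below. It approximates the IAM `*` wildcard with Python's `fnmatchcase`, which is an assumption rather than AWS's own evaluation logic; `is_covered` is a hypothetical helper and the account ID `123456789012` is a placeholder.

```python
from fnmatch import fnmatchcase

# Resource patterns copied from the policy above.
RESOURCE_PATTERNS = [
    "arn:aws:iam::*:role/ksctl-*",
    "arn:aws:iam::*:instance-profile/*",
]


def is_covered(arn: str, patterns: list[str] = RESOURCE_PATTERNS) -> bool:
    """Return True if the ARN matches at least one Resource pattern.

    Approximation: fnmatch-style '*' stands in for the IAM wildcard.
    """
    return any(fnmatchcase(arn, pattern) for pattern in patterns)


if __name__ == "__main__":
    print(is_covered("arn:aws:iam::123456789012:role/ksctl-demo-wp-role"))
    print(is_covered("arn:aws:iam::123456789012:role/some-other-role"))
```

Roles that do not carry the `ksctl-` prefix fall outside the policy, which is why the role names ksctl creates all start with it.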

- Custom EKS Access Policy

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "VisualEditor0",

"Effect": "Allow",

"Action": [

"eks:ListNodegroups",

"eks:ListClusters",

"eks:*"

],

"Resource": "*"

}

]

}
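In the EKS policy above, the wildcard entry `eks:*` already covers the two explicit list actions. The sketch below shows one way to spot such overlaps in an `Action` list. It lowercases the names because IAM action names are case-insensitive, and it approximates the IAM wildcard with `fnmatchcase`; `redundant_actions` is a hypothetical helper.

```python
from fnmatch import fnmatchcase

# Action list copied from the EKS policy above.
ACTIONS = ["eks:ListNodegroups", "eks:ListClusters", "eks:*"]


def redundant_actions(actions: list[str]) -> list[str]:
    """Return the actions that are already covered by a wildcard entry."""
    lowered = [action.lower() for action in actions]
    redundant = []
    for i, action in enumerate(lowered):
        for j, other in enumerate(lowered):
            # Only a different entry that contains a wildcard can cover this one.
            if i != j and "*" in other and fnmatchcase(action, other):
                redundant.append(actions[i])
                break
    return redundant


if __name__ == "__main__":
    print(redundant_actions(ACTIONS))
```

The explicit entries are harmless, so the policy works as written; the check is only useful when trimming a policy down.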

- AWS Managed Policies Required

- AmazonEC2FullAccess

- IAMReadOnlyAccess

Kubeconfig Authentication

After connecting to an AWS cluster using:

ksctl cluster connect

The generated kubeconfig uses AWS STS tokens, which expire after 15 minutes. When you encounter authentication errors, run the connect command again to refresh the credentials.
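The 15-minute lifetime can be tracked on the client side so that the command above is re-run before requests start failing. This is a minimal sketch under the assumption that you record the time at which the kubeconfig was last generated; `needs_refresh` is a hypothetical helper, not a ksctl feature.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# 15 minutes, as stated above for the STS token in the kubeconfig.
TOKEN_LIFETIME = timedelta(minutes=15)


def needs_refresh(issued_at: datetime, now: Optional[datetime] = None) -> bool:
    """Return True once a token issued at `issued_at` has reached its lifetime."""
    if now is None:
        now = datetime.now(timezone.utc)
    return now - issued_at >= TOKEN_LIFETIME


if __name__ == "__main__":
    issued = datetime.now(timezone.utc) - timedelta(minutes=20)
    print(needs_refresh(issued))
```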

Looking for CLI Commands?

All CLI commands mentioned in this documentation have detailed explanations in our command reference guide.

CLI Reference

👉 Check out our comprehensive CLI Commands Reference for:

- Detailed command syntax

- Usage examples

- Available options and flags

- Common use cases

3.2 - Azure

Azure support for Self-Managed and Managed Kubernetes Clusters

Caution

Azure credentials are required to access clusters. These credentials are sensitive information and must be kept secure.

Azure Credential Requirements

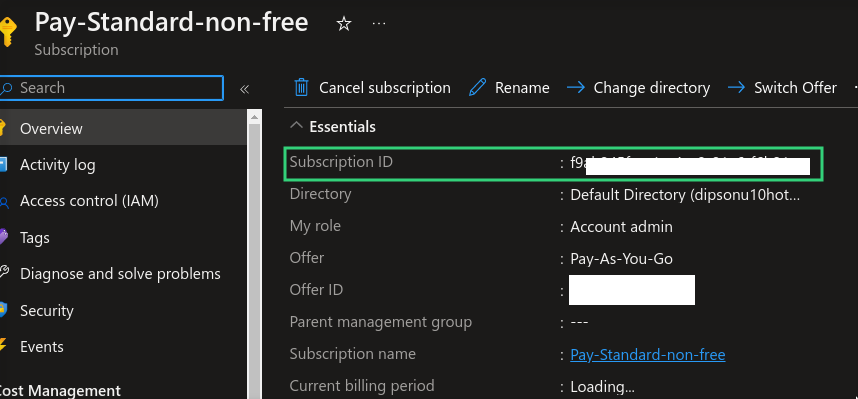

Subscription ID

Your Azure subscription identifier can be found in your subscription details.

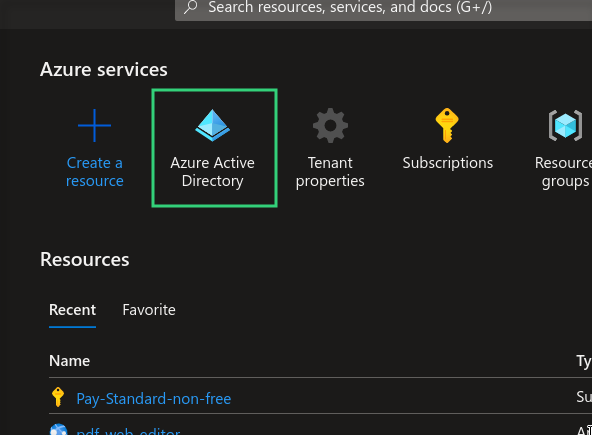

Tenant ID

Located in the Azure Dashboard, which provides access to all required credentials.

To locate your Tenant ID:

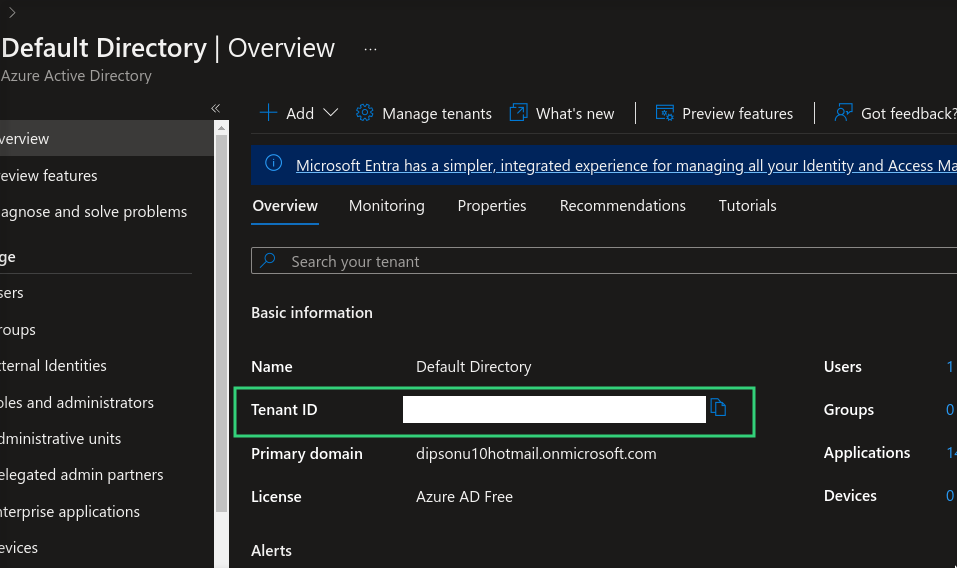

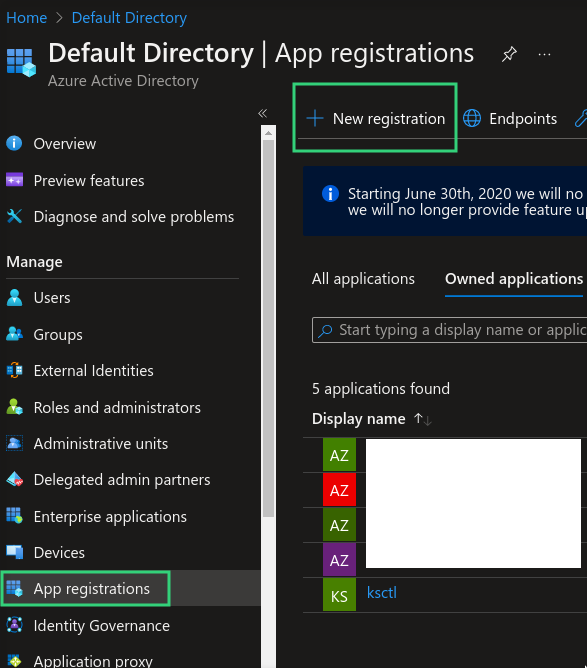

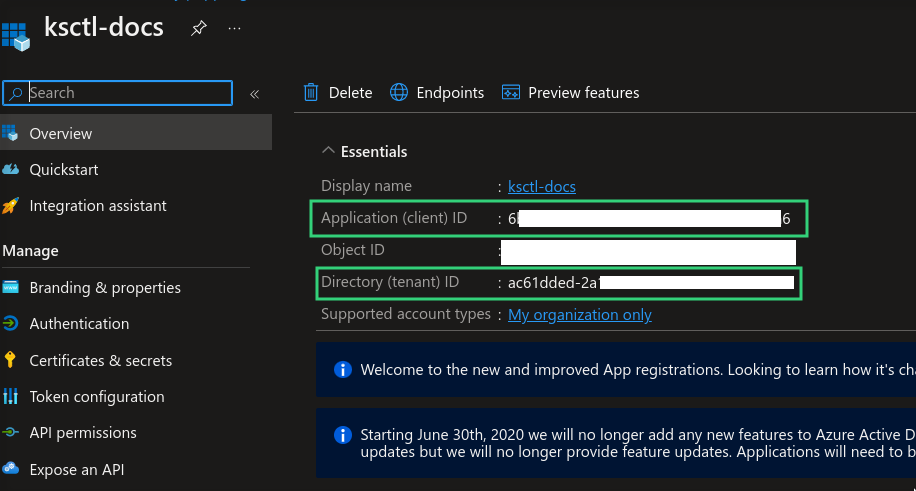

Client ID (Application ID)

Represents the identifier of your registered application.

Steps to create:

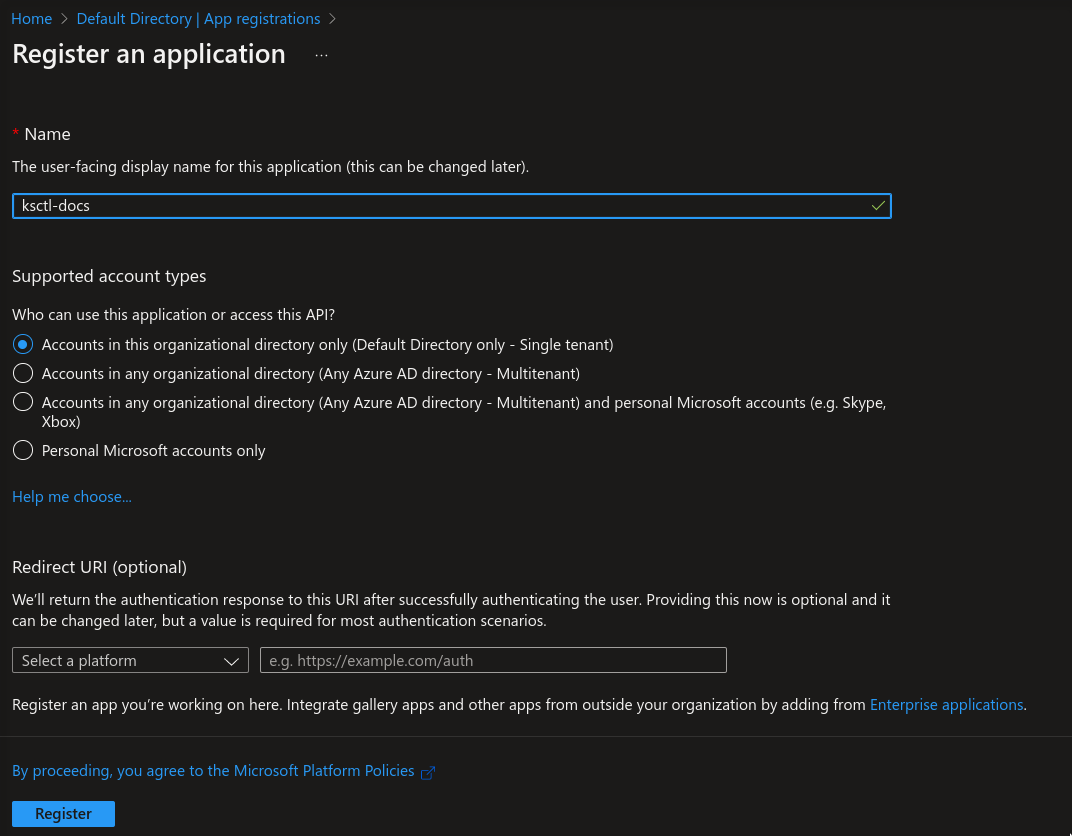

- Navigate to App Registrations

- Register a new application

- Obtain the Client ID

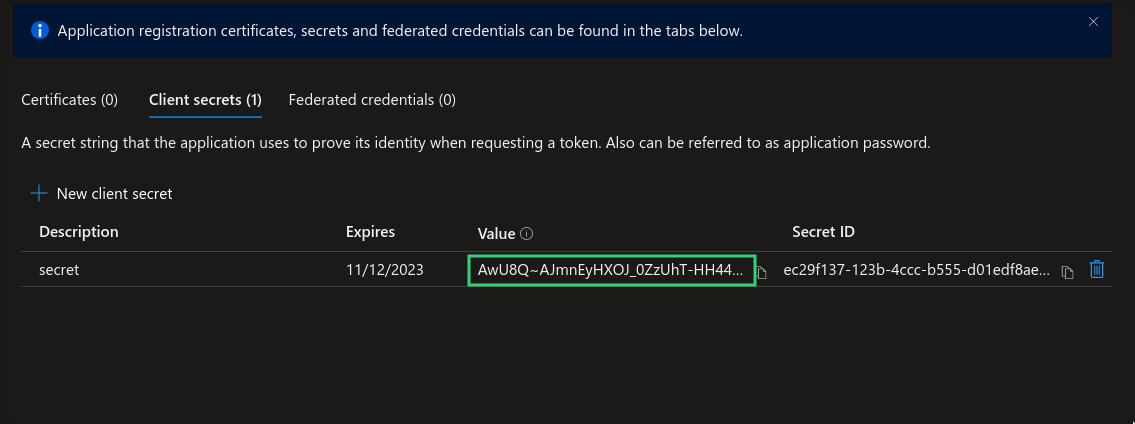

Client Secret

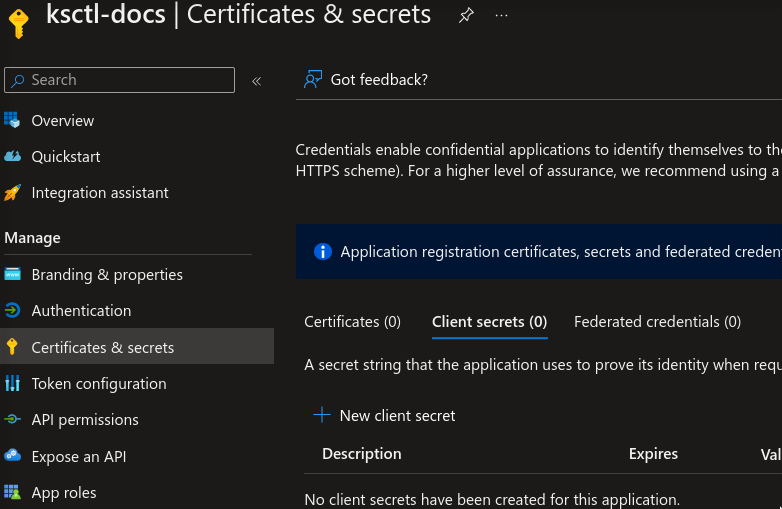

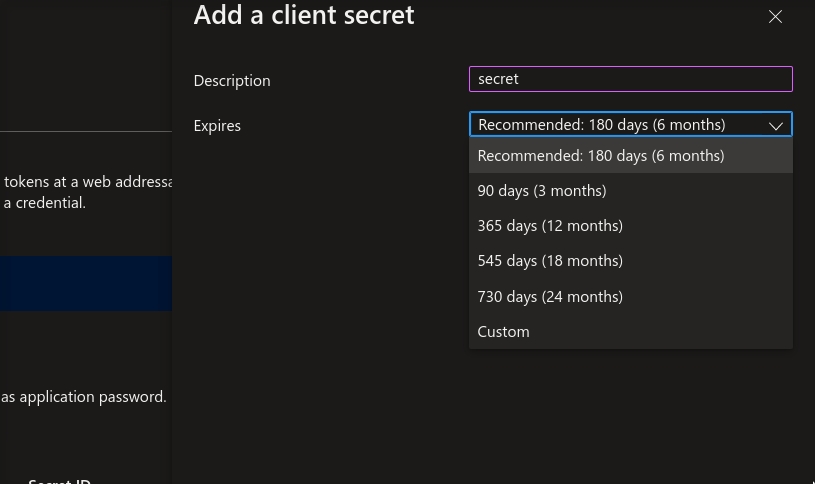

Authentication key for your registered application.

Steps to generate:

- Access secret creation

- Configure secret settings

- Save the generated secret

Role Assignment

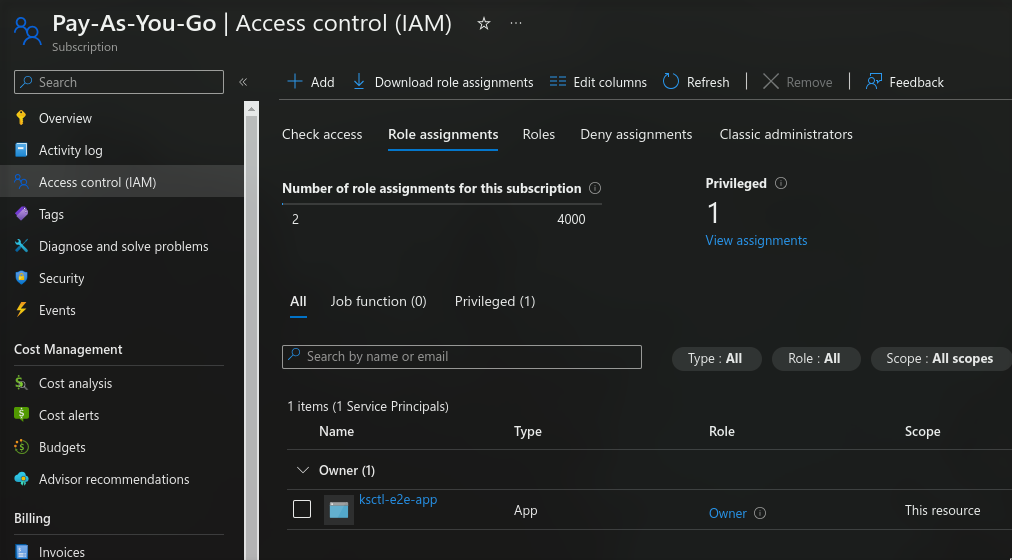

Configure application permissions:

- Navigate to Subscriptions > Access Control (IAM)

- Select “Role Assignment”

- Click “Add > Add Role Assignment”

- Create new role and specify the application name

- Configure desired permissions

If you want fine-grained permissions, you can create a custom role and attach it to this app (Beta):

{

"properties": {

"roleName": "Ksctl",

"description": "Kubmin.ksctl.com",

"assignableScopes": [

"/subscriptions/<subscription_id>"

],

"permissions": [

{

"actions": [

"Microsoft.ContainerService/register/action",

"Microsoft.ContainerService/unregister/action",

"Microsoft.ContainerService/operations/*",

"Microsoft.ContainerService/managedClusters/*",

"Microsoft.ContainerService/managedclustersnapshots/*",

"Microsoft.ContainerService/containerServices/*",

"Microsoft.ContainerService/deploymentSafeguards/*",

"Microsoft.ContainerService/locations/*",

"Microsoft.ContainerService/snapshots/*",

"Microsoft.Network/register/action",

"Microsoft.Network/unregister/action",

"Microsoft.Network/checkTrafficManagerNameAvailability/action",

"Microsoft.Network/internalNotify/action",

"Microsoft.Network/getDnsResourceReference/action",

"Microsoft.Network/queryExpressRoutePortsBandwidth/action",

"Microsoft.Network/checkFrontDoorNameAvailability/action",

"Microsoft.Network/privateDnsZonesInternal/action",

"Microsoft.Network/adminNetworkSecurityGroups/*",

"Microsoft.Network/applicationGateways/*",

"Microsoft.Network/ApplicationGatewayWebApplicationFirewallPolicies/*",

"Microsoft.Network/applicationGatewayAvailableRequestHeaders/*",

"Microsoft.Network/applicationGatewayAvailableResponseHeaders/*",

"Microsoft.Network/applicationGatewayAvailableServerVariables/*",

"Microsoft.Network/applicationGatewayAvailableSslOptions/*",

"Microsoft.Network/applicationGatewayAvailableWafRuleSets/read",

"Microsoft.Network/applicationSecurityGroups/*",

"Microsoft.Network/operations/*",

"Microsoft.Network/azurefirewalls/*",

"Microsoft.Network/azureFirewallFqdnTags/read",

"Microsoft.Network/dnsResolvers/*",

"Microsoft.Network/dnszones/*",

"Microsoft.Network/dnsoperationresults/*",

"Microsoft.Network/firewallPolicies/*",

"Microsoft.Network/gatewayLoadBalancerAliases/*",

"Microsoft.Network/ipAllocations/*",

"Microsoft.Network/ipGroups/*",

"Microsoft.Network/loadBalancers/*",

"Microsoft.Network/natGateways/*",

"Microsoft.Network/networkInterfaces/*",

"Microsoft.Network/virtualNetworks/*",

"Microsoft.Network/virtualNetworkGateways/read",

"Microsoft.Network/virtualNetworkGateways/write",

"Microsoft.Network/virtualNetworkGateways/delete",

"microsoft.network/virtualnetworkgateways/*",

"Microsoft.Network/virtualRouters/*",

"Microsoft.Resources/checkResourceName/action",

"Microsoft.Resources/changes/*",

"Microsoft.Resources/deployments/*",

"Microsoft.Resources/deploymentScripts/*",

"Microsoft.Resources/deploymentStacks/*",

"Microsoft.Resources/locations/*",

"Microsoft.Resources/providers/*",

"Microsoft.Resources/links/*",

"Microsoft.Resources/resources/*",

"Microsoft.Resources/subscriptions/*",

"Microsoft.Resources/subscriptions/locations/*",

"Microsoft.Resources/subscriptions/resourceGroups/*",

"Microsoft.Resources/subscriptions/providers/*",

"Microsoft.Resources/subscriptions/operationresults/*",

"Microsoft.Resources/subscriptions/resources/*",

"Microsoft.Resources/subscriptions/tagNames/*",

"Microsoft.Resources/subscriptionRegistrations/*",

"Microsoft.Resources/tags/*",

"Microsoft.Resources/templateSpecs/*",

"Microsoft.Resources/templateSpecs/versions/*",

"Microsoft.Resources/tenants/*",

"Microsoft.ManagedIdentity/register/*",

"Microsoft.ManagedIdentity/operations/*",

"Microsoft.ManagedIdentity/identities/*",

"Microsoft.ManagedIdentity/userAssignedIdentities/*"

],

"notActions": [],

"dataActions": [],

"notDataActions": []

}

]

}

}
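The `<subscription_id>` placeholder in `assignableScopes` has to be replaced with your own subscription ID before the role definition is usable. The sketch below builds that scope string and rejects values that are not well-formed GUIDs; `assignable_scope` is a hypothetical helper and the all-zero ID is a placeholder.

```python
import uuid


def assignable_scope(subscription_id: str) -> str:
    """Build the assignableScopes entry for one subscription.

    Raises ValueError if the ID is not a well-formed GUID.
    """
    parsed = uuid.UUID(subscription_id)  # raises ValueError on malformed input
    return f"/subscriptions/{parsed}"


if __name__ == "__main__":
    print(assignable_scope("00000000-0000-0000-0000-000000000000"))
```

Validating the ID up front avoids creating a role definition whose scope points at nothing.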

Authentication Methods

Command Line Interface

ksctl configure cloud

Available Cluster Types

Self-Managed Clusters

Self-managed clusters with the following components:

- Distributed etcd database instances

- HAProxy load balancer for control plane high availability

- Multiple control plane nodes

- Worker nodes

Bootstrap options:

- k3s (lightweight Kubernetes distribution)

- kubeadm (official Kubernetes bootstrap tool)

Azure Kubernetes Service (AKS)

Fully managed Kubernetes service by Azure.

Cluster Management Features

Cluster Operations

Managed Clusters (AKS)

- Create and delete operations

- Cluster switching

- Infrastructure updates currently not supported

High Availability Clusters

- Worker node scaling (add/remove)

- Secure SSH access to all components:

- Database nodes

- Load balancer

- Control plane nodes

- Worker nodes

- Protected by SSH key authentication

- Public access enabled

3.3 - Kind

Creates a cluster on the host machine using kind

Note

Prerequisites: Docker

Current features

Currently uses kind (Kubernetes in Docker)

4 - Reference

The CLI command reference below is mapped from the ksctl/cli repo

CLI Command Reference

The docs are now available in the cli repo. Here are the links to the documentation files

Info

These CLI commands are available in specific CLI versions, from v1.2.0 onwards: v1.2.0 cli references

4.1 - ksctl

ksctl

CLI tool for managing multiple K8s clusters

Synopsis

CLI tool which can manage multiple K8s clusters from local clusters to cloud provider specific clusters.

Options

--debug-cli Its used to run debug mode against cli's menudriven interface

-h, --help help for ksctl

-t, --toggle Help message for toggle

-v, --verbose Enable verbose output

SEE ALSO

- ksctl cluster - Use to work with clusters

- ksctl completion - Generate shell completion scripts

- ksctl configure - Configure ksctl cli

- ksctl self-update - Use to update the ksctl cli

- ksctl version - ksctl version

Auto generated by spf13/cobra on 5-May-2025

4.2 - ksctl_cluster

ksctl cluster

Use to work with clusters

Synopsis

It is used to work with cluster

Examples

ksctl cluster --help

Options

-h, --help help for cluster

Options inherited from parent commands

--debug-cli Its used to run debug mode against cli's menudriven interface

-v, --verbose Enable verbose output

SEE ALSO

- ksctl - CLI tool for managing multiple K8s clusters

- ksctl cluster addons - Use to work with addons

- ksctl cluster connect - Connect to existing cluster

- ksctl cluster create - Use to create a cluster

- ksctl cluster delete - Use to delete a cluster

- ksctl cluster get - Use to get the cluster

- ksctl cluster list - Use to list all the clusters

- ksctl cluster scaledown - Use to manually scaledown a selfmanaged cluster

- ksctl cluster scaleup - Use to manually scaleup a selfmanaged cluster

- ksctl cluster summary - Use to get summary of the created cluster

4.3 - ksctl_cluster_addons

ksctl cluster addons

Use to work with addons

Synopsis

It is used to work with addons

Examples

ksctl cluster addons --help

Options

-h, --help help for addons

Options inherited from parent commands

--debug-cli Its used to run debug mode against cli's menudriven interface

-v, --verbose Enable verbose output

SEE ALSO

- ksctl cluster - Use to work with clusters

- ksctl cluster addons disable - Use to disable an addon

- ksctl cluster addons enable - Use to enable an addon

4.4 - ksctl_cluster_addons_disable

ksctl cluster addons disable

Use to disable an addon

Synopsis

It is used to disable an addon

ksctl cluster addons disable [flags]

Examples

ksctl cluster addons disable --help

Options

-h, --help help for disable

Options inherited from parent commands

--debug-cli Its used to run debug mode against cli's menudriven interface

-v, --verbose Enable verbose output

SEE ALSO

- ksctl cluster addons - Use to work with addons

4.5 - ksctl_cluster_addons_enable

ksctl cluster addons enable

Use to enable an addon

Synopsis

It is used to enable an addon

ksctl cluster addons enable [flags]

Examples

ksctl cluster addons enable --help

Options

-h, --help help for enable

Options inherited from parent commands

--debug-cli Its used to run debug mode against cli's menudriven interface

-v, --verbose Enable verbose output

SEE ALSO

- ksctl cluster addons - Use to work with addons

4.6 - ksctl_cluster_connect

ksctl cluster connect

Connect to existing cluster

Synopsis

It is used to connect to existing cluster

ksctl cluster connect [flags]

Examples

ksctl cluster connect --help

Options

-h, --help help for connect

Options inherited from parent commands

--debug-cli Its used to run debug mode against cli's menudriven interface

-v, --verbose Enable verbose output

SEE ALSO

- ksctl cluster - Use to work with clusters

4.7 - ksctl_cluster_create

ksctl cluster create

Use to create a cluster

Synopsis

It is used to create cluster with the given name from user

ksctl cluster create [flags]

Examples

ksctl cluster create --help

Options

-h, --help help for create

Options inherited from parent commands

--debug-cli Its used to run debug mode against cli's menudriven interface

-v, --verbose Enable verbose output

SEE ALSO

- ksctl cluster - Use to work with clusters

4.8 - ksctl_cluster_delete

ksctl cluster delete

Use to delete a cluster

Synopsis

It is used to delete cluster with the given name from user

ksctl cluster delete [flags]

Examples

ksctl cluster delete --help

Options

-h, --help help for delete

Options inherited from parent commands

--debug-cli Its used to run debug mode against cli's menudriven interface

-v, --verbose Enable verbose output

SEE ALSO

- ksctl cluster - Use to work with clusters

4.9 - ksctl_cluster_get

ksctl cluster get

Use to get the cluster

Synopsis

It is used to get the cluster created by the user

ksctl cluster get [flags]

Examples

ksctl cluster get --help

Options

-h, --help help for get

Options inherited from parent commands

--debug-cli Its used to run debug mode against cli's menudriven interface

-v, --verbose Enable verbose output

SEE ALSO

- ksctl cluster - Use to work with clusters

4.10 - ksctl_cluster_list

ksctl cluster list

Use to list all the clusters

Synopsis

It is used to list all the clusters created by the user

ksctl cluster list [flags]

Examples

ksctl cluster list --help

Options

-h, --help help for list

Options inherited from parent commands

--debug-cli Its used to run debug mode against cli's menudriven interface

-v, --verbose Enable verbose output

SEE ALSO

- ksctl cluster - Use to work with clusters

4.11 - ksctl_cluster_scaledown

ksctl cluster scaledown

Use to manually scaledown a selfmanaged cluster

Synopsis

It is used to manually scaledown a selfmanaged cluster

ksctl cluster scaledown [flags]

Examples

ksctl cluster scaledown --help

Options

-h, --help help for scaledown

Options inherited from parent commands

--debug-cli Its used to run debug mode against cli's menudriven interface

-v, --verbose Enable verbose output

SEE ALSO

- ksctl cluster - Use to work with clusters

4.12 - ksctl_cluster_scaleup

ksctl cluster scaleup

Use to manually scaleup a selfmanaged cluster

Synopsis

It is used to manually scaleup a selfmanaged cluster

ksctl cluster scaleup [flags]

Examples

ksctl cluster scaleup --help

Options

-h, --help help for scaleup

Options inherited from parent commands

--debug-cli Its used to run debug mode against cli's menudriven interface

-v, --verbose Enable verbose output

SEE ALSO

- ksctl cluster - Use to work with clusters

4.13 - ksctl_cluster_summary

ksctl cluster summary

Use to get summary of the created cluster

Synopsis

It is used to get summary cluster

ksctl cluster summary [flags]

Examples

ksctl cluster summary --help

Options

-h, --help help for summary

Options inherited from parent commands

--debug-cli Its used to run debug mode against cli's menudriven interface

-v, --verbose Enable verbose output

SEE ALSO

- ksctl cluster - Use to work with clusters

4.14 - ksctl_completion

ksctl completion

Generate shell completion scripts

Synopsis

To load completions:

Bash:

$ source <(ksctl completion bash)

To load completions for each session, execute once:

Linux:

$ ksctl completion bash > /etc/bash_completion.d/ksctl

macOS:

$ ksctl completion bash > /usr/local/etc/bash_completion.d/ksctl

Zsh:

$ echo "autoload -U compinit; compinit" >> ~/.zshrc

$ ksctl completion zsh > "${fpath[1]}/_ksctl"

Fish:

$ ksctl completion fish | source

To load completions for each session, execute once:

$ ksctl completion fish > ~/.config/fish/completions/ksctl.fish

ksctl completion [bash|zsh|fish] [flags]

Options

-h, --help help for completion

Options inherited from parent commands

--debug-cli Its used to run debug mode against cli's menudriven interface

-v, --verbose Enable verbose output

SEE ALSO

- ksctl - CLI tool for managing multiple K8s clusters

4.15 - ksctl_configure

ksctl configure

Configure ksctl cli

Synopsis

It will display the current ksctl cli configuration

ksctl configure [flags]

Options

-h, --help help for configure

Options inherited from parent commands

--debug-cli Its used to run debug mode against cli's menudriven interface

-v, --verbose Enable verbose output

SEE ALSO

- ksctl - CLI tool for managing multiple K8s clusters

- ksctl configure cloud - Configure cloud

- ksctl configure storage - Configure storage

- ksctl configure telemetry - Configure telemetry

4.16 - ksctl_configure_cloud

ksctl configure cloud

Configure cloud

Synopsis

It will help you to configure the cloud

ksctl configure cloud [flags]

Options

-h, --help help for cloud

Options inherited from parent commands

--debug-cli Its used to run debug mode against cli's menudriven interface

-v, --verbose Enable verbose output

SEE ALSO

- ksctl configure - Configure ksctl cli

4.17 - ksctl_configure_storage

ksctl configure storage

Configure storage

Synopsis

It will help you to configure the storage

ksctl configure storage [flags]

Options

-h, --help help for storage

Options inherited from parent commands

--debug-cli Its used to run debug mode against cli's menudriven interface

-v, --verbose Enable verbose output

SEE ALSO

- ksctl configure - Configure ksctl cli

4.18 - ksctl_configure_telemetry

ksctl configure telemetry

Configure telemetry

Synopsis

It will help you to configure the telemetry

ksctl configure telemetry [flags]

Options

-h, --help help for telemetry

Options inherited from parent commands

--debug-cli Its used to run debug mode against cli's menudriven interface

-v, --verbose Enable verbose output

SEE ALSO

- ksctl configure - Configure ksctl cli

4.19 - ksctl_self-update

ksctl self-update

Use to update the ksctl cli

Synopsis

It is used to update the ksctl cli

ksctl self-update [flags]

Examples

ksctl self-update --help

Options

-h, --help help for self-update

Options inherited from parent commands

--debug-cli Its used to run debug mode against cli's menudriven interface

-v, --verbose Enable verbose output

SEE ALSO

- ksctl - CLI tool for managing multiple K8s clusters

4.20 - ksctl_version

ksctl version

ksctl version

Synopsis

To get version for ksctl components

ksctl version [flags]

Examples

ksctl version --help

Options

-h, --help help for version

Options inherited from parent commands

--debug-cli Its used to run debug mode against cli's menudriven interface

-v, --verbose Enable verbose output

SEE ALSO

- ksctl - CLI tool for managing multiple K8s clusters

5 - Contribution Guidelines

You can run almost all of the tests locally. The exception is the e2e tests, which require you to provide cloud credentials.

General tasks for new and existing contributors

Types of changes

There are many ways to contribute to the ksctl project. Here are a few examples:

- New changes to docs: You can contribute by writing new documentation, fixing typos, or improving the clarity of existing documentation.

- New features: You can contribute by proposing new features, implementing new features, or fixing bugs.

- Cloud support: You can contribute by adding support for new cloud providers.

- Kubernetes distribution support: You can contribute by adding support for new Kubernetes distributions.

Phases a change / feature goes through

- Raise an issue about it (used for prioritizing)

- Identify all the changes it demands

- If all goes well, you will be assigned

- If it is about adding cloud support, use CloudFactory and separate the logic for VMs, firewalls, etc. into their respective files. Also add a helper file for the behind-the-scenes logic, for ease of use

- If it is about adding distribution support, check its compatibility with the different cloud providers' VM configs and the firewall rules that need to be set up

Formatting for PR & Issue subject line

Subject / Title

# Related to enhancement

enhancement: <Title>

# Related to feature

feat: <Title>

# Related to Bug fix or other types of fixes

fix: <Title>

# Related to update

update: <Title>
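The four prefixes above can be checked mechanically, for example in a local script before opening a PR. This is a sketch only: `is_valid_title` is a hypothetical helper and not part of any ksctl tooling, and it assumes a single space after the colon, as in the examples above.

```python
# Prefixes listed in the subject line conventions above.
ALLOWED_PREFIXES = ("enhancement", "feat", "fix", "update")


def is_valid_title(title: str) -> bool:
    """Check that a title looks like '<prefix>: <Title>' with a listed prefix."""
    prefix, separator, rest = title.partition(": ")
    return separator != "" and prefix in ALLOWED_PREFIXES and rest.strip() != ""


if __name__ == "__main__":
    for candidate in ("feat: add a new cloud provider", "docs: fix typo", "fix:"):
        print(candidate, is_valid_title(candidate))
```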

Body

Follow the PR or issue template and add all the significant changes to the PR description.

Commit messages

Give a detailed description in the git commit message: what, why, and how.

Each commit must be signed off and should follow the Conventional Commits guidelines.

Conventional Commits

The commit message should be structured as follows:

<type>(optional scope): <description>

[optional body]

[optional footer(s)]

For more detailed information on conventional commits, you can refer to the official Conventional Commits specification.
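The header line of the structure above can be parsed with a short regular expression. The sketch below covers only the `<type>(optional scope): <description>` form shown on this page, not the full specification, and it assumes lowercase type names; `parse_header` is a hypothetical helper.

```python
import re
from typing import Optional, Tuple

# <type>(optional scope): <description>
HEADER = re.compile(
    r"^(?P<type>[a-z]+)(\((?P<scope>[^()]+)\))?: (?P<description>.+)$"
)


def parse_header(line: str) -> Optional[Tuple[str, Optional[str], str]]:
    """Return (type, scope, description), or None if the line does not match."""
    match = HEADER.match(line)
    if match is None:
        return None
    return match.group("type"), match.group("scope"), match.group("description")


if __name__ == "__main__":
    print(parse_header("fix(aws): refresh kubeconfig token"))
    print(parse_header("feat: add cluster summary"))
    print(parse_header("no header here"))
```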

Sign-off

Each commit must be signed off. A sign-off is a Signed-off-by line in the commit message, which git adds with the -s flag. The -S flag additionally signs the commit cryptographically. When committing changes in your local branch, add both flags to the git commit command:

$ git commit -s -S -m "YOUR_COMMIT_MESSAGE"

# Creates a signed commit with a Signed-off-by line

You can find more comprehensive details on how to sign off git commits by referring to the GitHub section on signing commits.
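A sign-off appears in the commit message as a `Signed-off-by:` trailer, which is what `git commit -s` appends. A quick local check for that trailer could look like the sketch below. `has_sign_off` is a hypothetical helper, the name and address are placeholders, and the check says nothing about cryptographic signatures.

```python
import re

# Matches a trailer such as: Signed-off-by: Jane Doe <jane@example.com>
SIGNED_OFF = re.compile(
    r"^Signed-off-by: .+ <[^<>@\s]+@[^<>\s]+>$", re.MULTILINE
)


def has_sign_off(message: str) -> bool:
    """Return True if the commit message contains a Signed-off-by trailer."""
    return SIGNED_OFF.search(message) is not None


if __name__ == "__main__":
    message = "fix: correct typo\n\nSigned-off-by: Jane Doe <jane@example.com>\n"
    print(has_sign_off(message))
```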

Pre Commit Hooks

pip install pre-commit

pre-commit install

Verification of Commit Signatures

You have the option to sign commits and tags locally, which adds a layer of assurance regarding the origin of your changes. GitHub designates commits or tags as either “Verified” or “Partially verified” if they possess a GPG, SSH, or S/MIME signature that is cryptographically valid.

GPG Commit Signature Verification

To sign commits using GPG and ensure their verification on GitHub, adhere to these steps:

- Check for existing GPG keys.

- Generate a new GPG key.

- Add the GPG key to your GitHub account.

- Inform Git about your signing key.

- Proceed to sign commits.

SSH Commit Signature Verification

To sign commits using SSH and ensure their verification on GitHub, follow these steps:

- Check for existing SSH keys.

- Generate a new SSH key.

- Add an SSH signing key to your GitHub account.

- Inform Git about your signing key.

- Proceed to sign commits.

S/MIME Commit Signature Verification

To sign commits using S/MIME and ensure their verification on GitHub, follow these steps:

- Inform Git about your signing key.

- Proceed to sign commits.

For more detailed instructions, refer to GitHub’s documentation on commit signature verification

Development

First, fork the ksctl repository.

cd <path> # go to the directory where you want to clone ksctl

mkdir <directory name> # create a directory

cd <directory name> # go inside the directory

git clone https://github.com/${YOUR_GITHUB_USERNAME}/ksctl.git # clone your forked repository

cd ksctl # go inside the ksctl directory

git remote add upstream https://github.com/ksctl/ksctl.git # set upstream

git remote set-url --push upstream no_push # no push to upstream

Trying out code changes

Before submitting a code change, it is important to test your changes thoroughly. You can do this by running the unit tests and integration tests.

Submitting changes

Once you have tested your changes, you can submit them to the ksctl project by creating a pull request. Make sure you use the provided PR template.

Getting help

If you need help contributing to the ksctl project, you can ask on the kubesimplify Discord server in the ksctl-cli channel, or raise an issue or start a discussion.

Thank you for contributing!

We appreciate your contributions to the ksctl project!

Some of our contributors: ksctl contributors. To learn about ksctl governance, see our community governance document.

5.1 - Contribution Guidelines for CLI

Repository: ksctl/cli

How to Build from source

Linux

make install_linux # for linux

Mac OS

make install_macos # for macos

5.2 - Contribution Guidelines for Core

Repository: ksctl/ksctl

Run all unit tests

make unit_test

Run all integration tests

make integration_test

Run both unit tests and integration tests

make test_all

For E2E tests on your local machine

Set the required tokens as environment variables

For cloud provider specific e2e tests

Tokens for Azure

export AZURE_SUBSCRIPTION_ID=""

export AZURE_TENANT_ID=""

export AZURE_CLIENT_ID=""

export AZURE_CLIENT_SECRET=""

Tokens for AWS

export AWS_ACCESS_KEY_ID=""

export AWS_SECRET_ACCESS_KEY=""

Tokens for MongoDB as storage

export MONGODB_SRV=<true|false> # boolean

export MONGODB_HOST=""

export MONGODB_PORT=""

export MONGODB_USER=""

export MONGODB_PASS=""

cd test/e2e

# then the syntax for running

go run . -op create -file azure/create.json

# for the available operations, refer to the file test/e2e/consts.go
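Before starting an e2e run, it can help to confirm that the variables listed above are set. The sketch below reports the missing ones per target. The variable names come from this page; the grouping into `azure`, `aws` and `mongodb` and the helper `missing_env` are illustrative assumptions.

```python
import os
from typing import Mapping, Optional

# Variable names as listed above, grouped per target for illustration.
REQUIRED_ENV = {
    "azure": [
        "AZURE_SUBSCRIPTION_ID",
        "AZURE_TENANT_ID",
        "AZURE_CLIENT_ID",
        "AZURE_CLIENT_SECRET",
    ],
    "aws": ["AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY"],
    "mongodb": [
        "MONGODB_SRV",
        "MONGODB_HOST",
        "MONGODB_PORT",
        "MONGODB_USER",
        "MONGODB_PASS",
    ],
}


def missing_env(target: str, env: Optional[Mapping[str, str]] = None) -> list[str]:
    """Return the variables for `target` that are unset or empty."""
    if env is None:
        env = os.environ
    return [name for name in REQUIRED_ENV[target] if not env.get(name)]


if __name__ == "__main__":
    for target in REQUIRED_ENV:
        print(target, missing_env(target))
```

An empty list for a target means its variables are all present; whether their values are valid is still only known once the e2e run talks to the provider.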

5.3 - Contribution Guidelines for Docs

Repository: ksctl/docs

How to Build from source

# Prerequisites

npm install -D postcss

npm install -D postcss-cli

npm install -D autoprefixer

npm install hugo-extended

Install Dependencies

hugo serve

6 - Concepts

This section will help you to learn about the underlying system of Ksctl. It will help you to obtain a deeper understanding of how Ksctl works.

📐 Architecture

Here is the entire Ksctl system level design

Sequence diagrams for 2 major operations

Create Cloud-Managed Clusters

sequenceDiagram

participant cm as Manager Cluster Managed

participant cc as Provision Controller

cm->>cc: transfers specs from user or machine

cc->>cc: to create the cloud infra (network, subnet, firewall, cluster)

cc->>cm: 'kubeconfig' and other cluster access to the state

cc->>cm: status of creation

Create Self-Managed HA clusters

sequenceDiagram

participant csm as Manager Cluster Self-Managed

participant cc as Provision Controller

participant bc as Bootstrap Controller

csm->>cc: transfers specs from user or machine

cc->>cc: to create the cloud infra (network, subnet, firewall, vms)

cc->>csm: return state to be used by BootstrapController

csm->>bc: transfers infra state like ssh key, pub IPs, etc

bc->>bc: bootstrap the infra by either (k3s or kubeadm)

bc->>csm: 'kubeconfig' and other cluster access to the state

bc->>csm: status of creation

6.1 - Cluster Summary and Recommendation

Cluster Summary and Recommendation

Ksctl provides a comprehensive summary of your cluster’s configuration, which includes both:

- debugging information for the entire cluster

- security recommendations

Note

This feature is available in Ksctl v2.7+.

This command works out of the box for any cluster created by ksctl v2.X+.

This operation is read-heavy, so run it when you are not performing other heavy operations on the cluster.

Here is a demo of how it looks

Why Cluster Summary?

It gives users access to the important details of their cluster with a single command: $ ksctl cluster summary

It helps users with the following:

- Debugging: It helps users debug their cluster with a single command, covering:

  - errors from failing Pods

  - metrics from the server, to see directly what is wrong with the cluster

  - cluster events

- Latency: It reports the latency between you and the cluster.

- Recommendation: It suggests remediation for the problems it finds in the cluster. It currently checks for the following:

  - PodSecurityPolicy: It checks whether PodSecurityPolicy is enabled. If not, it recommends enabling it.

  - NamespaceQuota: It checks whether namespace quotas are enabled. If not, it recommends enabling them.

  - PodResourceLimits & Requests: It checks whether Pod resource limits and requests are set. If not, it recommends setting them.

  - NodePort: It checks whether any NodePort Services are in use. If so, it recommends removing them.
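
As a rough illustration of the NodePort check described above, the Python sketch below scans a list of Service objects (plain dictionaries shaped like the items of kubectl get svc -o json) and reports those of type NodePort. The function name and the sample data are hypothetical; this is not how ksctl implements the check.

```python
def find_nodeport_services(services):
    """Return "namespace/name" for every Service whose spec.type is NodePort."""
    flagged = []
    for svc in services:
        if svc.get("spec", {}).get("type") == "NodePort":
            meta = svc.get("metadata", {})
            flagged.append(f'{meta.get("namespace", "default")}/{meta.get("name", "")}')
    return flagged


# Hypothetical sample data, not taken from a real cluster.
sample_services = [
    {"metadata": {"name": "web", "namespace": "shop"}, "spec": {"type": "NodePort"}},
    {"metadata": {"name": "db", "namespace": "shop"}, "spec": {"type": "ClusterIP"}},
]

for name in find_nodeport_services(sample_services):
    print(f"recommendation: consider removing NodePort service {name}")
```

The other checks in the list could follow the same shape: read the relevant objects, test one condition, and emit a recommendation when it fails.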

How to use it?

This subcommand was introduced in Ksctl v2.7. Run the following command against any existing cluster:

$ ksctl cluster summary

6.2 - Smart Cost & Emission Optimization

Smart Cost & Emission Optimization

Ksctl provides intelligent optimization features that help you minimize infrastructure costs and environmental impact when deploying Kubernetes clusters. These features ensure that your clusters run in both the most cost-effective and environmentally friendly configurations.

Ksctl Optimizer (KO) (v2.4+)

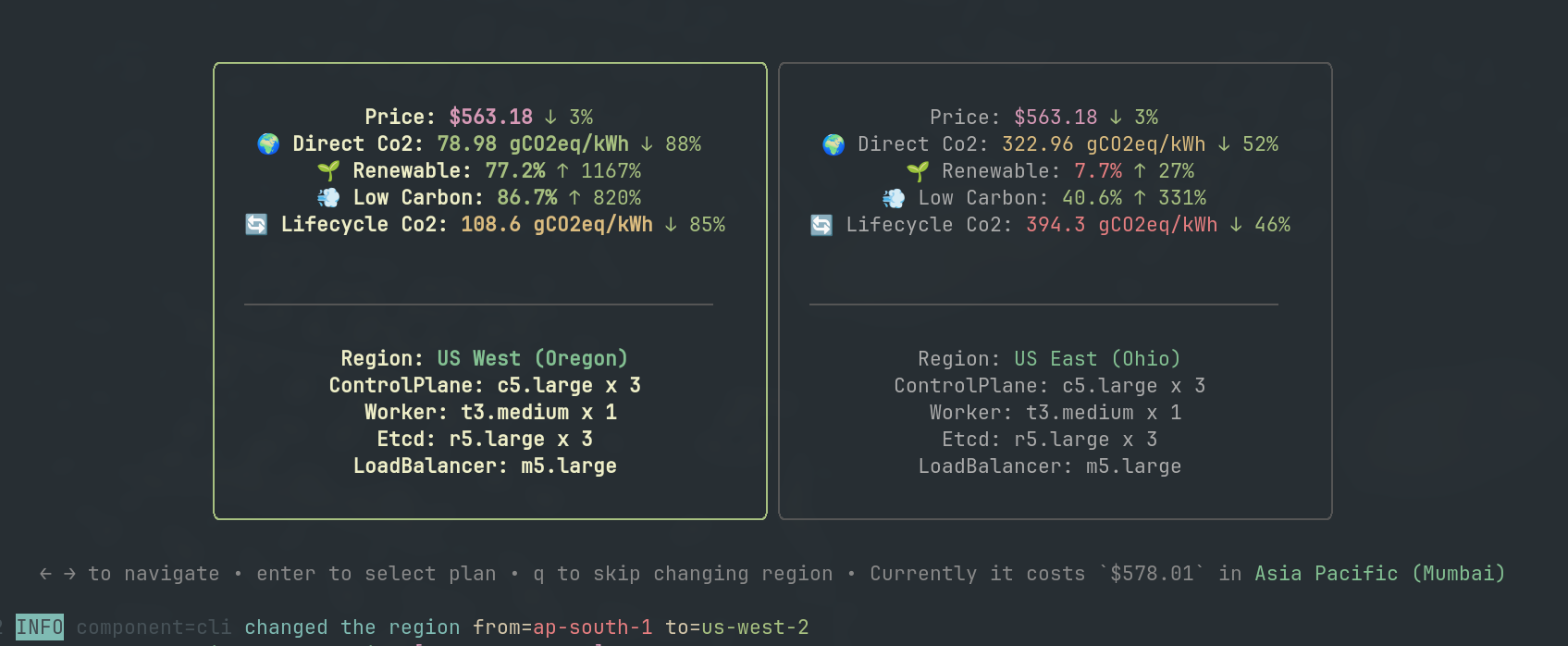

Introduced in Ksctl v2.4, KO optimizes costs and emissions by intelligently selecting the most efficient region for your Kubernetes clusters without changing your instance type.

Key Benefits

- 🚀 Automatic Region Optimization: Ksctl intelligently identifies and switches to the most cost-effective and environmentally friendly regions.

- 🛠️ Flexible Region Control: You can opt to keep your cluster in your preferred region if needed.

- 💰 Cost Savings: Dynamically adapts to pricing changes across regions, reducing your infrastructure expenses.

- 🌱 Eco-Friendly Operations: Minimizes carbon footprint through smart region selection.

How It Works

It evaluates regions based on multiple metrics to determine the optimal location for your Kubernetes clusters:

- Direct Carbon Intensity (Lower is better): Measures the carbon emissions from energy production.

- LCA Carbon Intensity (Lower is better): Evaluates the lifecycle carbon emissions of energy sources.

- Renewable Power Percentage (Higher is better): Highlights regions with higher renewable energy usage.

- Low CO₂ Power Percentage (Higher is better): Focuses on regions with a lower share of carbon-intensive power.
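
To make the four metrics concrete, here is a minimal Python sketch of one way regions could be ranked from them. It rescales each metric to the range 0 to 1, flips the "lower is better" ones, and sums the results with equal weights. The equal weighting, the class and function names, and the sample numbers are all assumptions for illustration; the source does not describe the scoring KO actually uses.

```python
from dataclasses import dataclass


@dataclass
class RegionMetrics:
    name: str
    direct_co2: float     # direct carbon intensity, lower is better
    lca_co2: float        # lifecycle (LCA) carbon intensity, lower is better
    renewable_pct: float  # renewable power percentage, higher is better
    low_co2_pct: float    # low CO2 power percentage, higher is better


def _normalise(values, higher_is_better):
    """Min-max scale to [0, 1] so that 1.0 is always the best value."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [1.0] * len(values)
    scaled = [(v - lo) / (hi - lo) for v in values]
    return scaled if higher_is_better else [1.0 - s for s in scaled]


def rank_regions(regions):
    """Return (name, score) pairs, best region first (equal weights assumed)."""
    if not regions:
        return []
    columns = [
        ([r.direct_co2 for r in regions], False),
        ([r.lca_co2 for r in regions], False),
        ([r.renewable_pct for r in regions], True),
        ([r.low_co2_pct for r in regions], True),
    ]
    scores = [0.0] * len(regions)
    for values, higher_is_better in columns:
        for i, s in enumerate(_normalise(values, higher_is_better)):
            scores[i] += s
    ranked = sorted(zip(regions, scores), key=lambda pair: pair[1], reverse=True)
    return [(region.name, round(score, 3)) for region, score in ranked]


# Hypothetical regions and values.
regions = [
    RegionMetrics("region-a", 400.0, 450.0, 20.0, 30.0),
    RegionMetrics("region-b", 100.0, 120.0, 80.0, 90.0),
    RegionMetrics("region-c", 250.0, 285.0, 50.0, 60.0),
]
print(rank_regions(regions))
```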

Visualization of dynamic region switching optimization

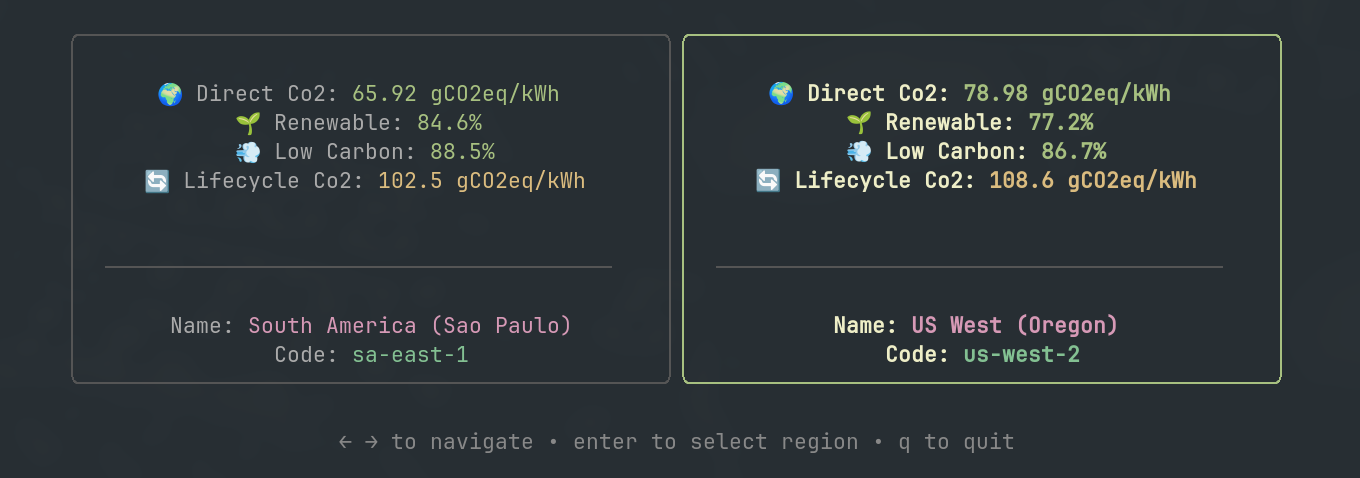

Ksctl Sustainability Metrics (KSM) (v2.5+)

Introduced in Ksctl v2.5, this feature enhances the optimization capabilities through a card-based selection interface that helps you choose the best region and instance type for your Kubernetes clusters based on your specific requirements.

Smart Region Selection

This feature ranks regions based on emission optimization metrics, ensuring that your Kubernetes clusters are not only cost-effective but also environmentally friendly.

Key Benefits

- 🌱 Minimizes carbon footprint by prioritizing regions with lower emissions.

- 🚀 Automatically ranks regions using advanced emission metrics.

How It Works

The Smart Region Selection evaluates and ranks regions based on the same comprehensive emission metrics used in Dynamic Region Switching:

- Direct Carbon Intensity

- LCA Carbon Intensity

- Renewable Power Percentage

- Low CO₂ Power Percentage

Visualization of region selection optimization based on emission metrics

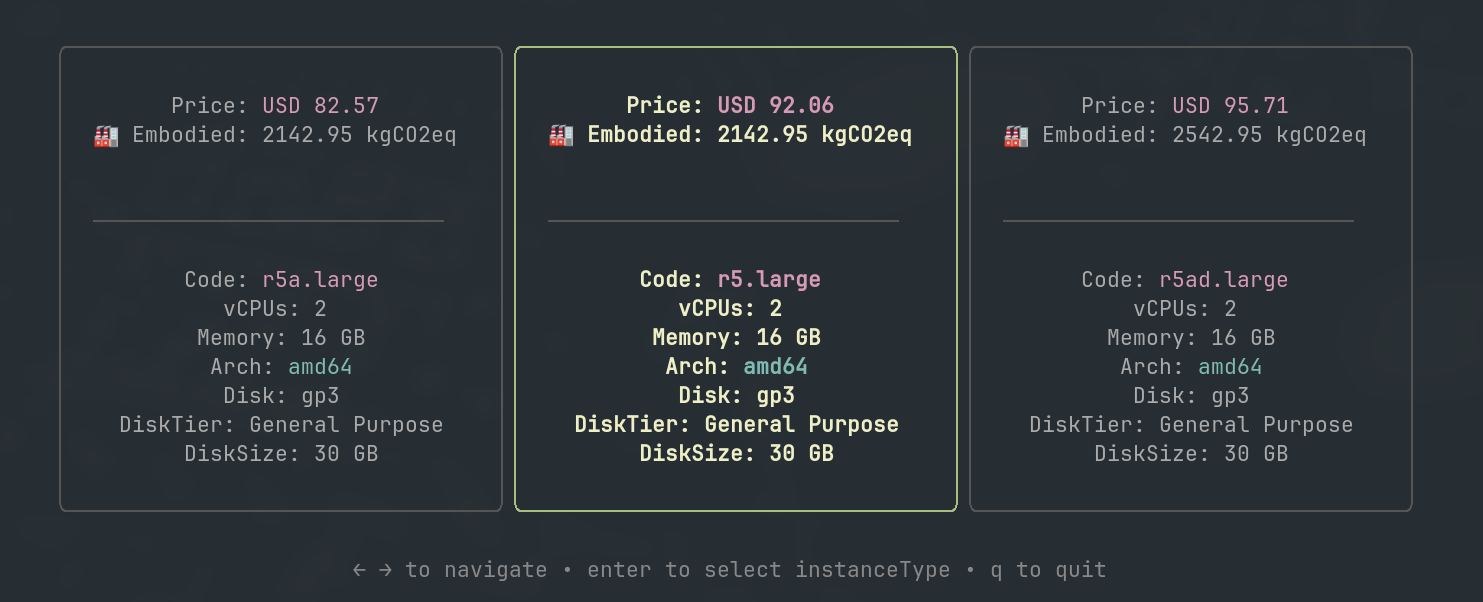

Smart Instance Type Selection

This feature helps you choose the most efficient instance type based on both cost and embodied emissions, ensuring that your Kubernetes clusters are optimized for efficiency and sustainability.

Key Benefits

- 💰 Cost Optimization: Adapts to pricing changes across instance types to reduce infrastructure costs.

- 🌱 Sustainability Focus: Prioritizes instance types with lower embodied emissions, minimizing your carbon footprint.

How It Works

The Smart Instance Type Selection evaluates and ranks instance types based on:

- Cost Efficiency: Analyzes and compares pricing across different instance types.

- Embodied Emissions: Evaluates the environmental impact of manufacturing and deploying each instance type.
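
When two criteria such as cost and embodied emissions pull in different directions, one common way to shortlist candidates is to keep only the options that no other option beats on both criteria at once (a Pareto front). The Python sketch below shows that idea. It is an assumption about how such a shortlist could be built, not a description of ksctl's ranking, and the instance names and numbers are made up.

```python
def pareto_front(options):
    """options: list of (name, hourly_cost, embodied_emissions).

    Return the names of options that are not dominated, i.e. no other option
    is at least as good on both criteria and strictly better on one.
    """
    front = []
    for name, cost, emissions in options:
        dominated = any(
            (c <= cost and e <= emissions) and (c < cost or e < emissions)
            for _, c, e in options
        )
        if not dominated:
            front.append(name)
    return front


# Hypothetical instance types: (name, cost per hour, embodied emissions).
instance_types = [
    ("type-small", 0.05, 12.0),
    ("type-medium", 0.08, 9.0),
    ("type-large", 0.10, 15.0),
]
print(pareto_front(instance_types))
```

Here type-large is dropped because type-small is both cheaper and lower in emissions, while the remaining two are left for the user to choose between.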

Visualization of instance type selection optimization based on cost and embodied emissions

Using the Optimization Features

These optimization features are integrated with the ksctl cluster create command and work automatically when you create a new Kubernetes cluster.

When you run the cluster creation command, Ksctl will:

- Analyze available regions and instance types

- Evaluate cost and emission metrics for each option

- Present optimized recommendations through an intuitive interface

- Allow you to select the best options for your specific needs

This ensures you get maximum cost efficiency and minimum emissions while maintaining performance and reliability for all your Kubernetes deployments.

Upgrading to Access These Features

To access these optimization features, make sure you’re using Ksctl v2.4 or above (v2.5 or above for KSM). You can update by running:

ksctl self-update

This will ensure you have the latest version with all the optimization capabilities described in this document.

6.3 - Cloud Controller

It is responsible for controlling the sequence of tasks to be executed for each cloud provider

6.4 - Core functionalities

Basic cluster operations

Create

- HA self-managed cluster (VMs are provisioned, then ksctl connects to them over SSH and configures them, much like Ansible)

- Managed cluster (the cloud provider creates the cluster and we get the kubeconfig in return)

Delete

- HA self-managed cluster

- Managed cluster

Scaleup

- Only for HA clusters, where the user can manually increase the number of worker nodes

- Example: if worker node 1 exists, it creates worker node 2, then 3, and so on

Scaledown

- Only for HA clusters, where the user can manually decrease the number of worker nodes

- Example: if worker nodes 1 and 2 exist, it deletes from last to first, that is, 2 then 1
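
The ordering in the Scaleup and Scaledown examples can be sketched in a few lines of Python. The function names are hypothetical and the sketch only models the order in which worker node indices are created or deleted, not the provisioning itself.

```python
def scale_up(current, desired):
    """Worker indices to create, in ascending order."""
    return list(range(current + 1, desired + 1))


def scale_down(current, desired):
    """Worker indices to delete, highest index first."""
    return list(range(current, desired, -1))


print(scale_up(1, 3))    # indices created when growing from 1 to 3 workers
print(scale_down(2, 0))  # indices deleted when shrinking from 2 to 0 workers
```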

Switch

- It uses the request from the user to fetch the kubeconfig of a specific cluster and saves it to a specific folder, ~/.ksctl/kubeconfig

Get

- For both HA and managed clusters, it searches the folders in a specific directory to find all the clusters that have been created for a given provider

Example: for a get request on Azure, it scans the directory .ksctl/state/azure/ha, and the equivalent directory for managed clusters, to collect all the folder names
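
A minimal Python sketch of that directory scan is shown below. It assumes the managed clusters live in a sibling folder named managed (the source only names the ha folder explicitly), and it runs against a temporary directory rather than a real .ksctl/state tree. The function name is hypothetical.

```python
from pathlib import Path
import tempfile


def list_clusters(state_root, provider):
    """Collect cluster folder names per cluster type for one provider."""
    found = {}
    for cluster_type in ("ha", "managed"):  # "managed" folder name is an assumption
        type_dir = Path(state_root) / provider / cluster_type
        if type_dir.is_dir():
            found[cluster_type] = sorted(p.name for p in type_dir.iterdir() if p.is_dir())
        else:
            found[cluster_type] = []
    return found


# Build a throwaway state tree to demonstrate the scan.
with tempfile.TemporaryDirectory() as root:
    (Path(root) / "azure" / "ha" / "demo-ha").mkdir(parents=True)
    (Path(root) / "azure" / "managed" / "demo-managed").mkdir(parents=True)
    demo_listing = list_clusters(root, "azure")

print(demo_listing)
```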

6.5 - Core Manager

It is responsible for managing client requests and calling the corresponding controller

Types

ManagerClusterKsctl

Role: Perform ksctl getCluster, switchCluster

ManagerClusterKubernetes

Role: Perform ksctl addApplicationAndCrds

Currently intended for machine-to-machine use, not for the ksctl CLI

ManagerClusterManaged

Role: Perform ksctl createCluster, deleteCluster

ManagerClusterSelfManaged

Role: Perform ksctl createCluster, deleteCluster, addWorkerNodes, delWorkerNodes
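
The roles listed above can be read as a lookup table from manager type to the operations it performs. The Python sketch below encodes exactly that table; the dictionary and the helper function are illustrative only and do not mirror the actual Go types in ksctl.

```python
# Manager types and operations as listed in this section.
MANAGER_OPERATIONS = {
    "ManagerClusterKsctl": {"getCluster", "switchCluster"},
    "ManagerClusterKubernetes": {"addApplicationAndCrds"},
    "ManagerClusterManaged": {"createCluster", "deleteCluster"},
    "ManagerClusterSelfManaged": {
        "createCluster",
        "deleteCluster",
        "addWorkerNodes",
        "delWorkerNodes",
    },
}


def managers_for(operation):
    """Return the manager types that perform the given operation."""
    return sorted(name for name, ops in MANAGER_OPERATIONS.items() if operation in ops)


print(managers_for("createCluster"))
print(managers_for("addWorkerNodes"))
```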

6.6 - Distribution Controller

It is responsible for controlling the execution sequence for configuring cloud resources with respect to the chosen Kubernetes distribution

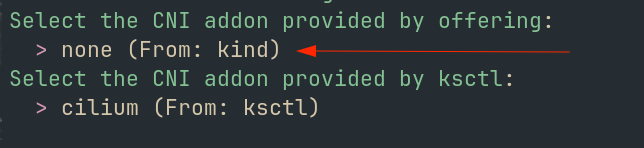

7 - Container Network Interface (CNI)

Cilium and Flannel for self-managed clusters

For cloud-managed clusters, it varies based on the provider.

Pre-requisites

You can choose a Ksctl CNI when you select none as the CNI of the main provider:

- Cloud Managed Cluster Provider (aks, eks, kind), or

- Kubernetes Bootstrap Provider (k3s, kubeadm)

For example,

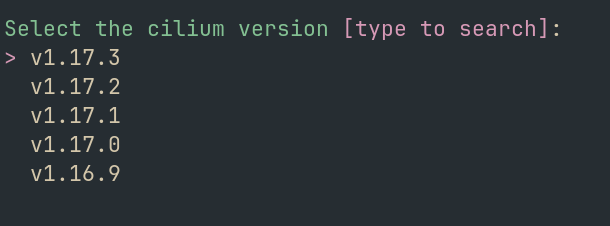

7.1 - Cilium

Cilium is a CNI plugin for Kubernetes that provides advanced networking and security features. It uses eBPF (extended Berkeley Packet Filter) technology to enable high-performance networking, load balancing, and security policies at the kernel level.

Note

This page covers only the Cilium installation provided by Ksctl.

You can choose the CNI plugin only during the cluster creation process.

Version selection

Default: latest

Now you can choose the Cilium version you want to install.

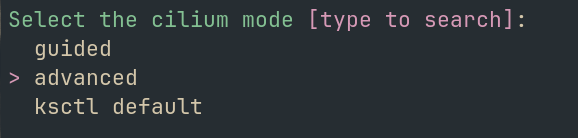

Cilium configuration

You can customize the Cilium configuration. Three modes are available:

- Default: An opinionated Cilium configuration maintained by the Ksctl team.

hubble:
  ui:
    enabled: true
  relay:
    enabled: true
  metrics:
    enabled:
      - dns
      - drop
      - tcp
      - flow
      - port-distribution
      - icmp
      - httpV2:exemplars=true;labelsContext=source_ip,source_namespace,source_workload,destination_ip,destination_namespace,destination_workload,traffic_direction

l7Proxy: true
kubeProxyReplacement: true

encryption:
  enabled: true
  type: wireguard

operator:
  replicas: 3
  prometheus:
    enabled: true

prometheus:
  enabled: true

- Advance: You can specify the helm chart

values.ymlfile to customize the Cilium configuration. it will open a text editor based on your terminal ENV$EDITORif not set it will usevimas default. Refer Helm Chart Values

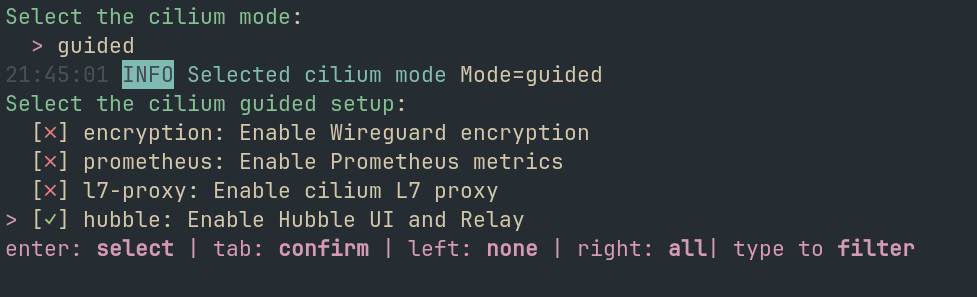

- Guided: It will provide you our preconfigured options of what all specific omponents to Enable/Disable
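
The editor fallback described for the Advanced mode follows a familiar pattern: read $EDITOR and fall back to vim when it is unset or empty. A minimal Python sketch of that lookup is below. The function name is hypothetical, and treating an empty $EDITOR the same as an unset one is an assumption.

```python
import os


def resolve_editor(environ=None):
    """Return the editor command: $EDITOR if set and non-empty, otherwise vim."""
    env = os.environ if environ is None else environ
    return env.get("EDITOR") or "vim"


print(resolve_editor({"EDITOR": "nano"}))
print(resolve_editor({}))
```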

7.2 - Flannel

Flannel is a CNI plugin for Kubernetes that provides a simple and efficient way to manage networking between containers. It creates an overlay network that allows containers to communicate with each other across different hosts, making it easier to deploy and scale applications in a Kubernetes cluster.

Note

This page covers only the Flannel installation provided by Ksctl.

You can choose the CNI plugin only during the cluster creation process.

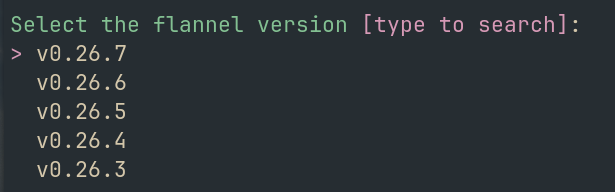

Version selection

Default: latest

Now you can choose the Flannel version you want to install.

8 - Contributors

Sponsors

| Name | How |
|---|---|
| Azure | Azure Open Source Program Office |
| Civo | Provided us with credits to run and test our project and was the first cloud provider we supported. |

Communities

| Name | Social Mentions |
|---|---|
| Kubernetes Architect | |
| WeMakeDevs HacktoberFest | Mentioned our project in their Hacktoberfest event. Youtube Link |
| Kubesimplify Community | We started from here and got a lot of support. Some of the mentions: Youtube Link, Tweet, etc. |

9 - Faq

General

What is ksctl?

Ksctl is a lightweight, easy-to-use tool that simplifies the process of managing Kubernetes clusters. It provides a unified interface for common cluster operations like create, delete, scaleup and down, and is designed to be simple, efficient, and developer-friendly.

What can I do with ksctl?

With ksctl, you can deploy Kubernetes clusters across any cloud provider, switch between providers seamlessly, and choose between managed and self-managed HA clusters. You can deploy clusters with a single command, without any complex configuration, and manage them with a unified interface that eliminates the need for provider-specific CLIs.

How does ksctl simplify cluster management?

Ksctl simplifies cluster management by providing a streamlined interface for common cluster operations like create, delete, scaleup and down. It eliminates the need for complex configuration and provider-specific CLIs, and provides a consistent experience across environments. With ksctl, developers can focus on building great applications without getting bogged down by the complexities of cluster management.

Who is ksctl for?

Ksctl is designed for developers, DevOps engineers, and anyone who needs to manage Kubernetes clusters. It is ideal for teams of all skill levels, from beginners to experts, and provides a simple, efficient, and developer-friendly way to deploy and manage clusters.

How does ksctl differ from other cluster management tools?

Ksctl is a lightweight, easy-to-use tool that simplifies the process of managing Kubernetes clusters. It provides a unified interface for common cluster operations like create, delete, scaleup and down, and is designed to be simple, efficient, and developer-friendly. Ksctl is not a full-fledged platform like Rancher, but rather a simple CLI tool that provides a streamlined interface for common cluster operations.

Is it production-ready?

No. Fine-grained customization of cloud resources is missing, and SSH ports are open to the public internet (self-managed clusters), although authentication via SSH key pair is in place. It is recommended that developers use it for development and testing purposes and to get familiar with the Kubernetes ecosystem.

Comparisons

Ksctl vs Cluster API

- Simplicity vs Complexity: Cluster API uses a sophisticated set of CRDs (Custom Resource Definitions) to manage machines, machine sets, and deployments. In contrast, Ksctl adopts a minimalist approach, focusing on reducing complexity for developers and operators.

- Target Audience: Ksctl caters to users seeking a lightweight, user-friendly tool for quick cluster management tasks, particularly in development and testing environments. Cluster API is designed for production-grade use cases, emphasizing flexibility and integration with Kubernetes’ declarative model.

- Dependencies: Ksctl is a standalone CLI tool that does not require a running Kubernetes cluster, making it easy to set up and run anywhere. On the other hand, Cluster API requires a pre-existing Kubernetes cluster to operate.

- Feature Focus: Ksctl emphasizes speed and simplicity in managing cluster lifecycle operations (create, delete, scale). Cluster API provides deeper control and automation features suitable for enterprises managing complex Kubernetes ecosystems.

What is the difference between Ksctl and k3sup?

- Scope: Ksctl is a comprehensive tool for managing Kubernetes clusters across multiple environments from cloud managed Kubernetes flavour to K3s and kubeadm. K3sup, on the other hand, focuses primarily on bootstrapping lightweight k3s clusters.

- Features: Ksctl handles infrastructure provisioning, cluster scaling, and cloud-agnostic lifecycle management, whereas k3sup is limited to installing k3s clusters without managing the underlying infrastructure.

- Cloud Support: Ksctl provides a unified interface for managing clusters across different providers, making it suitable for multi-cloud strategies. K3sup is more limited and designed for standalone setups.

How does Ksctl compare to Rancher?

- Tool vs Platform: Ksctl is a streamlined CLI tool for cluster management. Rancher, by contrast, is a feature-rich platform offering cluster governance, monitoring, access control, and application management.

- Use Case: Ksctl is lightweight and ideal for developers needing quick, uncomplicated cluster management. Rancher is tailored for enterprise environments where centralized management and control of multiple clusters are essential.

- Operational Scope: Ksctl focuses on basic lifecycle operations (create, delete, scale). Rancher includes features like Helm chart deployment, RBAC integration, and advanced workload management.

What is the difference between Ksctl and k3d, Kind, or Minikube?

- Environment Scope: Ksctl is designed for both local and cloud-based Kubernetes cluster management. Tools like k3d, Kind, and Minikube are primarily for local development and testing purposes.

- Cluster Management: Ksctl can provision, scale, and delete clusters in cloud environments, whereas k3d, Kind, and Minikube focus on providing lightweight clusters for experimentation and local development.

- Infrastructure Management: Ksctl integrates with infrastructure provisioning, while the others rely on pre-existing local environments (e.g., Docker for k3d and Kind, or virtual machines for Minikube).

How does Ksctl compare to eksctl?

- Cloud Support: Ksctl is cloud-agnostic and supports multiple providers, making it suitable for multi-cloud setups. Eksctl, on the other hand, is tightly coupled with AWS and designed exclusively for managing EKS clusters.

- Features: Ksctl provides an all-in-one tool for provisioning infrastructure, managing the cluster lifecycle, and scaling across different environments. Eksctl is focused on streamlining EKS setup and optimizing AWS integrations like IAM, VPCs, and Load Balancers.

- Target Audience: Ksctl appeals to users seeking a flexible, multi-cloud solution. Eksctl is ideal for AWS-centric teams that require deep integration with AWS services.

10 - Features

Our Vision

Transform your Kubernetes experience with a tool that puts simplicity and efficiency first. Ksctl eliminates the complexity of cluster management, allowing developers to focus on what matters most – building great applications.

Key Features

🌐 Universal Cloud Support

- Deploy clusters across any cloud provider

- Seamless switching between providers

- Support for both managed and self-managed clusters

- Freedom to choose your bootstrap provider (K3s or Kubeadm)

🚀 Zero-to-Cluster Simplicity

- Single command cluster deployment

- No complex configuration required

- Automated setup and initialization

- Instant development environment readiness

- Local file-based or MongoDB storage options

- Single-binary deployment, making it lightweight and efficient

🛠️ Streamlined Management

- Unified interface for all operations

- Eliminates need for provider-specific CLIs

- Consistent experience across environments

- Simplified scaling and upgrades

🌱 Smart Cost & Emission Optimization

- Dynamic Region Switching for optimal cost efficiency

- Smart Region Selection based on emission metrics

- Intelligent Instance Type Selection balancing cost and sustainability

- Automatic ranking of regions based on carbon intensity metrics

- Eco-friendly operations with focus on renewable energy usage

- No sacrifice of performance or reliability (for details, see Smart Cost & Emission Optimization)

🎯 Developer-Focused Design

- Near-zero learning curve

- Intuitive command structure

- No new configurations to learn

- Perfect for teams of all skill levels

- WASM workload support as well (refer to Ksctl Stack)

🔄 Flexible Operation

- Self-managed cluster support

- Cloud provider managed offerings

- Multiple bootstrap provider options

- Seamless environment transitions

Technical Benefits

- Infrastructure Agnostic: Deploy anywhere, manage consistently

- Rapid Deployment: Bypass complex setup steps and day 0 tasks

- Future-Ready: Upcoming support for day 1 operations and Wasm

- Community-Driven: Active development and continuous improvements

- Sustainability-Focused: Optimized for both cost efficiency and environmental impact

11 - Ksctl Cluster Management

Ksctl Cluster Management (kcm) is used to manage clusters created by ksctl.

Changelog

v0.2.0

- added support for stack, aka the ksctl/ka repo

Caution

Ensure that all resources installed via the addon are completely removed before proceeding with the uninstallation.

For example, if you have installed the stack addon and created a stack named gitops-standard, you must delete the gitops-standard stack before uninstalling the stack addon.

11.1 - Ksctl Stack

It helps deploy stacks using a CRD to manage installation, upgrades, downgrades, and uninstallation from one version to another, and provides a single source of truth for which applications are installed.

How to Install?

ksctl/kcm is a pre-requisite for this to work

apiVersion: manage.ksctl.com/v1
kind: ClusterAddon
metadata:
  labels:
    app.kubernetes.io/name: kcm
  name: ksctl-stack
spec:
  addons:
    - name: stack

Changelog

v0.2.0

- added support for gitops-standard stack

- added support for monitoring-lite stack

- added support for service-mesh-standard stack

- added support for wasm-spinkube-standard stack

- added support for wasm-kwasm-plus stack

Types

Stack

To define heterogeneous components, we came up with the stack: it contains M components, which are different applications, each with its own version.

Info

This is currently available on all clusters created from [email protected] and utilizes [email protected]

Note

It has a dependency on kcm, which helps manage its lifecycle.

About Lifecycle of application stack

Once you have applied the stack with kubectl apply, it starts deploying the applications in the stack.

If you want to upgrade the applications in the stack, edit the stack, change the version of the application, and apply the stack again.

Supported Apps and CNI

| Name | Type | More Info |

|---|---|---|

| GitOps | standard | Link |

| Monitoring | lite | Link |

| Service Mesh | standard | Link |

| SpinKube | standard | Link |

| Kwasm | plus | Link |

Note on wasm category apps

Only one of the apps under the wasm category (Kwasm, SpinKube) can be installed at a time; you might need to uninstall one to get the other running.

Also, the current implementation of the wasm category apps annotates all the nodes with kwasm as true.

Components in Stack

Every stack is a collection of components, so when you override stack values you need to specify which component the value belongs to and then provide the value in a map[string]any format.

GitOps-Standard

How to use it (Basic Usage)

apiVersion: app.ksctl.com/v1
kind: Stack
metadata:
  labels:
    app.kubernetes.io/name: ka
  name: gitops
spec:
  stackName: "gitops-standard"

Overrides available

apiVersion: app.ksctl.com/v1
kind: Stack
metadata:
  labels:
    app.kubernetes.io/name: ka
  name: gitops
spec:
  stackName: "gitops-standard"
  disableComponents: <list[str]> # list of components to disable; accepted values are argocd, argorollouts
  overrides:
    argocd:
      version: <string> # version of argocd
      noUI: <bool> # to disable the UI
      namespace: <string> # namespace to install argocd
      namespaceInstall: <bool> # to install namespace-specific argocd
    argorollouts:
      version: <string> # version of argorollouts
      namespace: <string> # namespace to install argorollouts
      namespaceInstall: <bool> # to install namespace-specific argorollouts
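
If you generate Stack manifests from a script, the per-component override layout above maps naturally onto nested dictionaries. The Python sketch below builds a gitops-standard manifest and rejects component names outside the accepted list. The helper name and the sample override values are hypothetical. The output is JSON, which kubectl apply -f also accepts.

```python
import json

# Accepted component names for gitops-standard, as listed above.
GITOPS_COMPONENTS = {"argocd", "argorollouts"}


def gitops_stack(name, disable=(), overrides=None):
    """Build a Stack manifest dict for the gitops-standard stack."""
    unknown = set(disable) - GITOPS_COMPONENTS
    if unknown:
        raise ValueError(f"unknown components: {sorted(unknown)}")
    spec = {"stackName": "gitops-standard"}
    if disable:
        spec["disableComponents"] = list(disable)
    if overrides:
        spec["overrides"] = overrides  # component name -> map of values
    return {
        "apiVersion": "app.ksctl.com/v1",
        "kind": "Stack",
        "metadata": {"name": name, "labels": {"app.kubernetes.io/name": "ka"}},
        "spec": spec,
    }


# Hypothetical example: disable Argo Rollouts and tweak Argo CD.
manifest = gitops_stack(
    "gitops",
    disable=["argorollouts"],
    overrides={"argocd": {"noUI": True, "namespace": "argocd"}},
)
print(json.dumps(manifest, indent=2))
```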

Monitoring-Lite

How to use it (Basic Usage)

apiVersion: app.ksctl.com/v1
kind: Stack
metadata:
  labels:
    app.kubernetes.io/name: ka
  name: monitoring
spec:
  stackName: "monitoring-lite"

Overrides available

apiVersion: app.ksctl.com/v1
kind: Stack
metadata:
  labels:
    app.kubernetes.io/name: ka
  name: monitoring
spec:
  stackName: "monitoring-lite"
  disableComponents: <list[str]> # list of components to disable; accepted values are kube-prometheus
  overrides:
    kube-prometheus:
      version: <string> # version of kube-prometheus
      helmKubePromChartOverridings: <map[string]any> # helm chart overridings, kube-prometheus

Service-Mesh-Standard

How to use it (Basic Usage)

apiVersion: app.ksctl.com/v1
kind: Stack
metadata:
  labels:
    app.kubernetes.io/name: ka
  name: mesh
spec:
  stackName: "mesh-standard"

Overrides available

apiVersion: app.ksctl.com/v1
kind: Stack
metadata:
  labels:
    app.kubernetes.io/name: ka
  name: mesh
spec:
  stackName: "mesh-standard"
  disableComponents: <list[str]> # list of components to disable; accepted values are istio
  overrides:
    istio:
      version: <string> # version of istio
      helmBaseChartOverridings: <map[string]any> # helm chart overridings, istio/base
      helmIstiodChartOverridings: <map[string]any> # helm chart overridings, istio/istiod

Wasm Spinkube-standard

How to use it (Basic Usage)

apiVersion: app.ksctl.com/v1
kind: Stack
metadata:
  labels:
    app.kubernetes.io/name: ka
  name: spinkube
spec:
  stackName: "wasm/spinkube-standard"

Demo app

kubectl apply -f https://raw.githubusercontent.com/spinkube/spin-operator/main/config/samples/simple.yaml

kubectl port-forward svc/simple-spinapp 8083:80

curl localhost:8083/hello

Overrides available

apiVersion: app.ksctl.com/v1
kind: Stack
metadata:
  labels:
    app.kubernetes.io/name: ka
  name: spinkube
spec:
  stackName: "wasm/spinkube-standard"
  disableComponents: <list[str]> # list of components to disable; accepted values are spinkube-operator, spinkube-operator-shim-executor, spinkube-operator-crd, cert-manager, kwasm-operator, spinkube-operator-runtime-class
  overrides:
    spinkube-operator:
      version: <string> # the version is the same for shim-executor, runtime-class, shim-executor-crd, spinkube-operator
      helmOperatorChartOverridings: <map[string]any> # helm chart overridings, spinkube-operator
    spinkube-operator-shim-executor:
      version: <string> # the version is the same for shim-executor, runtime-class, shim-executor-crd, spinkube-operator
    spinkube-operator-runtime-class:
      version: <string> # the version is the same for shim-executor, runtime-class, shim-executor-crd, spinkube-operator
    spinkube-operator-crd:
      version: <string> # the version is the same for shim-executor, runtime-class, shim-executor-crd, spinkube-operator
    cert-manager:
      version: <string>
      certmanagerChartOverridings: <map[string]any> # helm chart overridings, cert-manager
    kwasm-operator:
      version: <string>
      kwasmOperatorChartOverridings: <map[string]any> # helm chart overridings, kwasm/kwasm-operator

Wasm Kwasm-plus

How to use it (Basic Usage)

apiVersion: app.ksctl.com/v1
kind: Stack
metadata:
  labels:
    app.kubernetes.io/name: ka
  name: kwasm
spec:
  stackName: "wasm/kwasm-plus"

Demo app(wasmedge)

---
apiVersion: v1
kind: Pod
metadata:
  name: "myapp"
  namespace: default
  labels:
    app: nice
spec:
  runtimeClassName: wasmedge
  containers:
    - name: myapp
      image: "docker.io/cr7258/wasm-demo-app:v1"
      ports:
        - containerPort: 8080
          name: http
  restartPolicy: Always
---
apiVersion: v1
kind: Service
metadata:
  name: nice
spec:
  selector:
    app: nice
  type: ClusterIP
  ports:
    - name: nice
      protocol: TCP
      port: 8080
      targetPort: 8080

Demo app(wasmtime)

apiVersion: batch/v1
kind: Job
metadata:
  name: nice
  namespace: default
  labels:
    app: nice
spec:
  template:
    metadata:
      name: nice
      labels:
        app: nice
    spec:
      runtimeClassName: wasmtime
      containers:
        - name: nice
          image: "meteatamel/hello-wasm:0.1"
      restartPolicy: OnFailure

#### For wasmedge

# once up and running

kubectl port-forward svc/nice 8080:8080

# then you can curl the service

curl localhost:8080

#### For wasmtime

# just check the logs

Overrides available

apiVersion: app.ksctl.com/v1
kind: Stack
metadata:
  labels:
    app.kubernetes.io/name: ka
  name: kwasm
spec:
  stackName: "wasm/kwasm-plus"
  disableComponents: <list[str]> # list of components to disable; accepted values are kwasm-operator
  overrides:
    kwasm-operator:
      version: <string>
      kwasmOperatorChartOverridings: <map[string]any> # helm chart overridings, kwasm/kwasm-operator

12 - Kubernetes Distributions

K3s and Kubeadm only work for self-managed clusters

12.1 - K3s

K3s for self-managed Cluster on supported cloud providers

Overview

K3s is a lightweight, certified Kubernetes distribution designed for resource-constrained environments. In ksctl, K3s is used for creating self-managed Kubernetes clusters with the following components:

- Control plane (k3s server) - Manages the Kubernetes API server, scheduler, and controller manager

- Worker plane (k3s agent) - Runs your workloads

- Datastore (external etcd) - Manages cluster state

- Load balancer - Distributes API server traffic in HA deployments

Architecture

ksctl deploys K3s in a high-availability configuration with:

- Multiple control plane nodes for redundancy

- External etcd cluster for reliable data storage

- Load balancer for API server access

- Worker nodes for running applications

CNI Options

K3s in ksctl supports the following Container Network Interface (CNI) options:

- Flannel (default) - Simple overlay network provided by K3s

- None - Disables the built-in CNI, allowing for installation of external CNIs like Cilium

Default CNI

The default CNI for K3s in ksctl is Flannel. To use an external CNI, select “none” during cluster creation. This will:

- Disable the built-in Flannel CNI

- Set the --flannel-backend=none and --disable-network-policy flags

- Allow you to install your preferred CNI solution

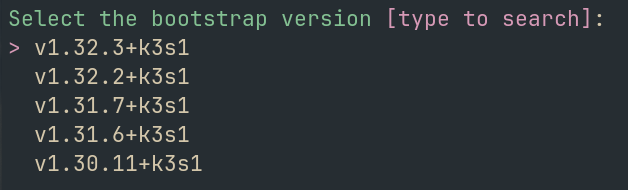
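
The effect of the CNI choice on the K3s server flags can be sketched as a small mapping. The Python function below is a hypothetical helper, not part of ksctl; only the two flags named above come from this page.

```python
def k3s_cni_flags(cni):
    """Return the extra k3s server flags implied by the chosen CNI option."""
    if cni == "flannel":
        # Built-in default: no extra flags needed.
        return []
    if cni == "none":
        # Disable the bundled Flannel and network policy controller so an
        # external CNI such as Cilium can be installed afterwards.
        return ["--flannel-backend=none", "--disable-network-policy"]
    raise ValueError(f"unsupported CNI option: {cni!r}")


print(k3s_cni_flags("none"))
```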

Version Selection

ksctl allows you to choose specific K3s versions for your cluster. The tool validates versions against available releases from the K3s GitHub repository.

If no version is specified, ksctl will automatically use the latest stable K3s release.

Control Plane Configuration

The K3s control plane in ksctl is deployed with the following optimizations:

- Node taints to prevent workloads from running on control plane nodes

- TLS configuration with certificates for secure communication

- Integration with the external etcd datastore

- Configuration for high-availability when multiple control plane nodes are deployed

Advanced Features

K3s in ksctl includes several advanced features:

- HA Setup: Multiple control plane nodes with an external etcd datastore

- Kubeconfig Management: Automatic configuration of kubeconfig with the correct cluster endpoints

- Certificate Management: Secure TLS setup with certificate generation

- Seamless Scaling: Easy addition of worker nodes to the cluster

Limitations

- K3s in ksctl is designed for self-managed clusters only

- Some K3s-specific features may require additional manual configuration

Next Steps

After deploying a K3s cluster with ksctl, you can:

- Access your cluster using the generated kubeconfig

- Install additional components using Kubernetes package managers

- Deploy your applications using kubectl, Helm, or other tools

- Scale your cluster by adding more worker nodes as needed

12.2 - Kubeadm

Kubeadm for HA Cluster on supported provider

Kubeadm support is added with etcd as datastore

Info

Here the Default CNI is none

Overview

Kubeadm is a tool built to provide best-practice “fast paths” for creating Kubernetes clusters. ksctl integrates Kubeadm to provision highly-available Kubernetes clusters across your infrastructure.

Features

- External etcd topology: Uses a dedicated etcd cluster for improved reliability

- HA control plane: Supports multiple control plane nodes for high availability

- Seamless cluster expansion: Easily add worker nodes

- Automatic certificate management: Handles all required PKI setup

- Secure token handling: Manages bootstrap tokens with automatic renewal

Architecture

ksctl with Kubeadm creates a cluster with the following components:

- External etcd cluster: Dedicated nodes for the etcd database

- Control plane nodes: Running the Kubernetes control plane components

- Worker nodes: For running your workloads

- Load balancer: Provides a single entry point to the control plane

┌────────────────┐    ┌────────────────┐    ┌────────────────┐
│                │    │                │    │                │
│ Control Plane  │    │ Control Plane  │    │ Control Plane  │
│                │    │                │    │                │
└────────────────┘    └────────────────┘    └────────────────┘
        │                     │                     │
        └─────────────┬───────┴─────────────┬───────┘
                      │                     │
                      ▼                     ▼
           ┌─────────────────────┐ ┌─────────────────┐
           │                     │ │                 │
           │    Load Balancer    │ │  External etcd  │
           │                     │ │                 │
           └─────────────────────┘ └─────────────────┘
                      │
        ┌─────────────┴───────────┐
        │                         │
        ▼                         ▼
┌────────────────┐        ┌────────────────┐
│                │        │                │
│  Worker Node   │  ...   │  Worker Node   │
│                │        │                │
└────────────────┘        └────────────────┘

Technical Details

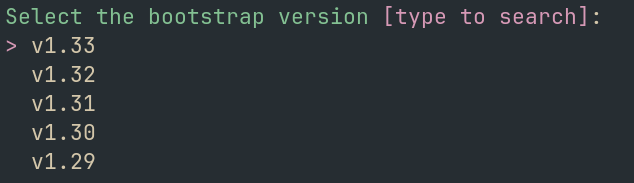

Kubernetes Versions

ksctl supports multiple Kubernetes versions through Kubeadm. The system automatically fetches available versions from the Kubernetes releases, allowing you to choose your preferred version.

Default Version

If you don’t specify a version, ksctl will use the latest stable Kubernetes version available.

Container Runtime

The default container runtime used is containerd, which is set up and configured automatically during cluster deployment.

Network Configuration

Kubeadm in ksctl configures the following networking defaults:

- Pod subnet: 10.244.0.0/16

- Service subnet: 10.96.0.0/12

- DNS domain: cluster.local
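
When changing these defaults, it helps to confirm that the Pod and Service ranges do not overlap. The Python sketch below does this with the standard ipaddress module, using the default values above. The function name is hypothetical and the check is an illustration, not something ksctl is documented to run.

```python
import ipaddress


def check_cluster_subnets(pod_cidr="10.244.0.0/16", service_cidr="10.96.0.0/12"):
    """Verify the two CIDRs do not overlap and report their sizes."""
    pod = ipaddress.ip_network(pod_cidr)
    svc = ipaddress.ip_network(service_cidr)
    if pod.overlaps(svc):
        raise ValueError(f"pod subnet {pod} overlaps service subnet {svc}")
    return {"pod_addresses": pod.num_addresses, "service_addresses": svc.num_addresses}


summary = check_cluster_subnets()
print(summary)
```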

CNI (Container Network Interface)

CNI Configuration

By default, Kubeadm in ksctl uses none as the CNI. You can deploy your preferred CNI solution using ksctl’s addon system after cluster creation.

Bootstrap Process

The bootstrap process follows these steps:

Control plane initialization:

- Generates bootstrap tokens and certificate keys

- Configures the first control plane node

- Sets up external etcd connection

- Initializes the Kubernetes API server

Additional control plane nodes:

- Join additional control plane nodes to form an HA cluster

- Share certificates securely between nodes

Worker node setup:

- Install required components (containerd, kubelet, etc.)

- Join worker nodes to the cluster using bootstrap tokens
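The ordering of the three phases above can be sketched as a simple plan builder. This is only an illustration of the sequence described here; the function `bootstrap_plan`, the node names, and the step wording are hypothetical and do not reflect ksctl's internal code.

```python
def bootstrap_plan(control_planes, workers):
    """Return the ordered bootstrap actions for the given node counts.

    Hypothetical helper for illustration only; not part of ksctl.
    """
    if control_planes < 1:
        raise ValueError("at least one control plane node is required")
    # Phase 1: initialise the first control plane node.
    plan = [
        "generate bootstrap token and certificate key",
        "configure control-plane-1 with the external etcd endpoints",
        "initialise the API server on control-plane-1",
    ]
    # Phase 2: remaining control plane nodes join to form the HA cluster.
    for i in range(2, control_planes + 1):
        plan.append(f"join control-plane-{i} as a control plane node")
    # Phase 3: workers are prepared, then joined with the bootstrap token.
    for i in range(1, workers + 1):
        plan.append(f"install containerd and kubelet on worker-{i}")
        plan.append(f"join worker-{i} using the bootstrap token")
    return plan


if __name__ == "__main__":
    for step in bootstrap_plan(control_planes=3, workers=2):
        print(step)
```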

Cluster Access

Once your cluster is created, ksctl automatically configures and saves your kubeconfig file with appropriate context names based on your cluster details.

Limitations

- All nodes must run Ubuntu-based Linux distributions

13 - Roadmap

Current Status on Supported Providers and Next Features

Supported Providers

flowchart LR;

classDef green color:#022e1f,fill:#00f500;

classDef red color:#022e1f,fill:#f11111;

classDef white color:#022e1f,fill:#fff;

classDef black color:#fff,fill:#000;

classDef blue color:#fff,fill:#00f;

XX[ksctl]--CloudFactory-->web{Cloud Providers};

XX[ksctl]--DistroFactory-->web2{Distributions};

XX[ksctl]--StorageFactory-->web3{State Storage};

web--Civo-->civo{Types};

civo:::green--managed-->civom[Create & Delete]:::green;

civo--HA-->civoha[Create & Delete]:::green;

web--Local-Kind-->local{Types};

local:::green--managed-->localm[Create & Delete]:::green;

local--HA-->localha[Create & Delete]:::black;

web--AWS-->aws{Types};

aws:::green--managed-->awsm[Create & Delete]:::green;

aws--HA-->awsha[Create & Delete]:::green;

web--Azure-->az{Types};

az:::green--managed-->azsm[Create & Delete]:::green;

az--HA-->azha[Create & Delete]:::green;

web2--K3S-->k3s{Types};

k3s:::green--HA-->k3ha[Create & Delete]:::green;

web2--Kubeadm-->kubeadm{Types};

kubeadm:::green--HA-->kubeadmha[Create & Delete]:::green;

web3--Local-Store-->slocal{Local}:::green;

web3--Remote-Store-->rlocal{Remote}:::green;

rlocal--Provider-->mongo[MongoDB]:::green;

Next Features

All the below features will be moved to the Project Board and will be tracked there.

- Talos as the next Bootstrap provider

- Green software features that help you save energy

- First-class WASM support

- ML features, unikernels, and better ML workload scalability

- Production stack covering monitoring, security, and application-specific integrations such as Vault, Kafka, etc.

- Health checks for Kubernetes clusters

- Role-Based Access Control for any cluster

- Ability to import any existing cluster while respecting its existing state: ksctl should not overwrite it with new state, and should manage only the resources the tool has access to

- Add an initial production-ready setup for cert-manager + ingress controller (nginx) + Gateway API

- Add an initial production-ready setup for monitoring (Prometheus + Grafana), tracing (Jaeger), and OpenTelemetry support

- Add an initial production-ready setup for networking (Cilium)

- Add an initial production-ready setup for service mesh (Istio)

- Add support for Kubernetes migration, such as moving from one cloud provider to another

- Add support for Kubernetes backup

- OpenTelemetry support will lead to better observability by combining logs, metrics, and traces in one place, and will enable tooling that improves detection through alerting, suggestions, … (from errors to suggestions based on patterns)

14 - Search Results

15 - Storage

Storage providers

15.1 - External Storage

External MongoDB as a Storage provider

Data to store and filtering it performs

- First, it gets the cluster data / credentials data based on these filters:

  - cluster_name (for cluster)

  - region (for cluster)

  - cloud_provider (for cluster & credentials)

  - cluster_type (for cluster)

- Also, when the state of the cluster has reached the stable desired state, mark the IsCompleted flag in the specific cloud_provider struct to indicate it is done

- Make sure the above fields are specified before writing to the storage
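The filter fields listed above can be pictured as a small lookup document. The sketch below builds one and rejects missing fields before a write, mirroring the last bullet. The function names `build_cluster_filter` and `build_credentials_filter` and the sample values are hypothetical; the real storage driver may shape its queries differently.

```python
def build_cluster_filter(cluster_name, region, cloud_provider, cluster_type):
    """Build the lookup document for a cluster state record.

    Hypothetical helper for illustration only; field names follow the
    filter list above, everything else is an assumption.
    """
    fields = {
        "cluster_name": cluster_name,
        "region": region,
        "cloud_provider": cloud_provider,
        "cluster_type": cluster_type,
    }
    # Every filter field must be set before anything is written.
    missing = [name for name, value in fields.items() if not value]
    if missing:
        raise ValueError("missing filter fields: " + ", ".join(missing))
    return fields


def build_credentials_filter(cloud_provider):
    """Credentials are looked up by cloud provider only."""
    if not cloud_provider:
        raise ValueError("missing filter fields: cloud_provider")
    return {"cloud_provider": cloud_provider}


if __name__ == "__main__":
    print(build_cluster_filter("demo", "eastus", "azure", "ha"))
```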

How to use it

- Call the Init function to get the storage; make sure the caller holds it in a variable of the interface type

- Before performing any operations, you must call Connect()

- Before using the methods Read(), Write(), and Delete(), make sure you have called Setup()

- ReadCredentials() and WriteCredentials() can be called directly; you only need to specify the cloud provider you want to read or write

- For GetOneOrMoreClusters(), you simply need to specify the filter

- For AlreadyCreated(), you just have to specify the function arguments

- Don’t forget to call storage.Kill() when you want to stop the complete execution. It guarantees that it will wait until all pending operations on the storage are completed

- Custom storage directory: set the env var KSCTL_CUSTOM_DIR_ENABLED; its value must be a space-separated list of directory names

- You need to pass the secrets in the context.

Hint: use mongodb://${username}:${password}@${domain}:${port}, or for MongoDB Atlas use mongodb+srv://${username}:${password}@${domain}
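Usernames and passwords that contain characters such as `@`, `:` or `/` must be percent-encoded before they are placed in a MongoDB connection string. A minimal sketch of assembling the two URI forms from the hint follows; the helper name `mongodb_uri` and the sample values are hypothetical, and ksctl itself only needs the finished string.

```python
from urllib.parse import quote_plus


def mongodb_uri(username, password, domain, port=None, srv=False):
    """Assemble a MongoDB connection string with escaped credentials.

    Hypothetical helper for illustration only; not part of ksctl.
    """
    user = quote_plus(username)
    secret = quote_plus(password)
    if srv:
        # mongodb+srv URIs resolve hosts through DNS and carry no port.
        return f"mongodb+srv://{user}:{secret}@{domain}"
    if port is None:
        raise ValueError("a port is required for the mongodb:// form")
    return f"mongodb://{user}:{secret}@{domain}:{port}"


if __name__ == "__main__":
    print(mongodb_uri("admin", "p@ss:w/rd", "localhost", 27017))
    print(mongodb_uri("admin", "secret", "cluster0.example.mongodb.net", srv=True))
```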

Things to look for

Make sure that when you receive return data from Read(), you copy the value at the returned address into the storage pointer variable, not the address itself!

When any credentials are written, they are stored in

- Database: ksctl-{userid}-db

- Collection: {cloud_provider}

- Document/Record: raw bson data with above specified data and filter fields

- Database:

When you run Switch (aka getKubeconfig), it fetches the kubeconfig from point 3 and returns the kubeconfig data

15.2 - Local Storage

Local as a Storage Provider

Refer: internal/storage/local

Data to store and filtering it performs

- First, it gets the cluster data / credentials data based on these filters:

  - cluster_name (for cluster)

  - region (for cluster)

  - cloud_provider (for cluster & credentials)

  - cluster_type (for cluster)

- Also, when the state of the cluster has reached the stable desired state, mark the IsCompleted flag in the specific cloud_provider struct to indicate it is done

- Make sure the above fields are specified before writing to the storage

It is stored something like this

it will use almost the same construct.

* ClusterInfos => $USER_HOME/.ksctl/state/
    |-- {cloud_provider}
        |-- {cluster_type} aka (ha, managed)
            |-- "{cluster_name} {region}"
                |-- state.json
* CredentialInfo => $USER_HOME/.ksctl/credentials/{cloud_provider}.json
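The layout above maps directly onto path construction. The sketch below derives both file locations from the filter fields; the function names `state_path` and `credentials_path` and the sample values are hypothetical, and `PurePosixPath` is used only to keep the output the same on every platform.

```python
from pathlib import PurePosixPath


def state_path(home, cloud_provider, cluster_type, cluster_name, region):
    """Return the state.json location for one cluster.

    Hypothetical helper for illustration only; not part of ksctl.
    """
    # The leaf directory is "{cluster_name} {region}", separated by a space.
    leaf = f"{cluster_name} {region}"
    return PurePosixPath(
        home, ".ksctl", "state", cloud_provider, cluster_type, leaf, "state.json"
    )


def credentials_path(home, cloud_provider):
    """Return the credentials file location for one cloud provider."""
    return PurePosixPath(home, ".ksctl", "credentials", f"{cloud_provider}.json")


if __name__ == "__main__":
    print(state_path("/home/dev", "aws", "ha", "demo", "us-east-1"))
    print(credentials_path("/home/dev", "aws"))
```

Because the leaf directory name contains a space, remember to quote the path when using it in a shell.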

How to use it

- Call the Init function to get the storage; make sure the caller holds it in a variable of the interface type

- Before performing any operations, you must call Connect()

- Before using the methods Read(), Write(), and Delete(), make sure you have called Setup()

- ReadCredentials() and WriteCredentials() can be called directly; you only need to specify the cloud provider you want to read or write

- For GetOneOrMoreClusters(), you simply need to specify the filter

- For AlreadyCreated(), you just have to specify the function arguments

- Don’t forget to call storage.Kill() when you want to stop the complete execution. It guarantees that it will wait until all pending operations on the storage are completed

- Custom storage directory: set the env var KSCTL_CUSTOM_DIR_ENABLED; its value must be a space-separated list of directory names

- It creates the configuration directories on your behalf
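The call order described above (connect, then set up, then read and write, then kill) can be illustrated with a toy in-memory stand-in. This is not the ksctl storage interface: the class `InMemoryStore`, its lower-case method names, and the arguments to `setup` are all assumptions made for the sake of the example, and the real `Kill()` additionally waits for pending operations, which this toy does not have.

```python
class InMemoryStore:
    """Toy stand-in that enforces the call order described above.

    Hypothetical class for illustration only; not part of ksctl.
    """

    def __init__(self):
        self._connected = False
        self._target = None
        self._docs = {}

    def connect(self):
        self._connected = True

    def setup(self, cloud_provider, region, cluster_name, cluster_type):
        if not self._connected:
            raise RuntimeError("call connect() before setup()")
        # Remember which cluster the following read/write calls refer to.
        self._target = (cloud_provider, cluster_type, cluster_name, region)

    def _require_target(self):
        if self._target is None:
            raise RuntimeError("call setup() before read(), write() or delete()")
        return self._target

    def write(self, state):
        self._docs[self._require_target()] = dict(state)

    def read(self):
        # Hand back a copy so callers never share the stored object.
        return dict(self._docs[self._require_target()])

    def delete(self):
        self._docs.pop(self._require_target(), None)

    def kill(self):
        self._connected = False
        self._target = None


if __name__ == "__main__":
    store = InMemoryStore()
    store.connect()
    store.setup("aws", "us-east-1", "demo", "ha")
    store.write({"IsCompleted": True})
    print(store.read())
    store.kill()
```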

Things to look for

- Make sure that when you receive return data from Read(), you copy the value at the returned address into the storage pointer variable, not the address itself!

- When any credentials are written, they are stored in <some_dir>/.ksctl/credentials/{cloud_provider}.json

- When any clusterState is written, it gets stored in <some_dir>/.ksctl/state/{cloud_provider}/{cluster_type}/{cluster_name} {region}/state.json

- When you run Switch (aka getKubeconfig), it fetches the kubeconfig from point 3 and returns the kubeconfig data