K3s and Kubeadm only work for self-managed clusters


Kubernetes Distributions

1 - K3s

K3s for self-managed clusters on supported cloud providers

Overview

K3s is a lightweight, certified Kubernetes distribution designed for resource-constrained environments. In ksctl, K3s is used for creating self-managed Kubernetes clusters with the following components:

- Control plane (k3s server) - Manages the Kubernetes API server, scheduler, and controller manager

- Worker plane (k3s agent) - Runs your workloads

- Datastore (external etcd) - Manages cluster state

- Load balancer - Distributes API server traffic in HA deployments

Architecture

ksctl deploys K3s in a high-availability configuration with:

- Multiple control plane nodes for redundancy

- External etcd cluster for reliable data storage

- Load balancer for API server access

- Worker nodes for running applications

CNI Options

K3s in ksctl supports the following Container Network Interface (CNI) options:

- Flannel (default) - Simple overlay network provided by K3s

- None - Disables the built-in CNI, allowing for installation of external CNIs like Cilium

Default CNI

The default CNI for K3s in ksctl is Flannel. To use an external CNI, select “none” during cluster creation. This will:

- Disable the built-in Flannel CNI

- Set the `--flannel-backend=none` and `--disable-network-policy` flags

- Allow you to install your preferred CNI solution
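As a rough illustration of the flag selection described above, the sketch below maps a CNI choice to extra `k3s server` arguments. The function name `k3s_cni_args` and the accepted choice strings are hypothetical and are not taken from ksctl's source; only the two flags come from the text above.

```python
def k3s_cni_args(cni: str = "flannel") -> list[str]:
    """Return extra `k3s server` arguments for the chosen CNI (illustrative only)."""
    choice = cni.strip().lower()
    if choice == "flannel":
        # Built-in Flannel is the default, so no extra flags are needed.
        return []
    if choice == "none":
        # Turn off Flannel and the bundled network policy controller so an
        # external CNI such as Cilium can be installed afterwards.
        return ["--flannel-backend=none", "--disable-network-policy"]
    raise ValueError(f"unsupported CNI choice: {cni!r}")


print(k3s_cni_args("none"))
```

Rejecting unknown values, rather than silently falling back to Flannel, keeps a typo from producing a cluster with an unexpected network setup.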

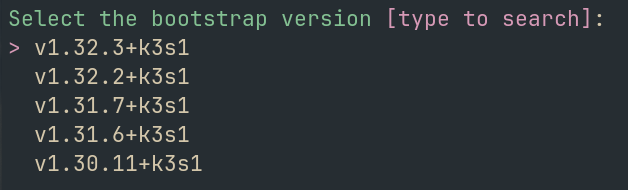

Version Selection

ksctl allows you to choose specific K3s versions for your cluster. The tool validates versions against available releases from the K3s GitHub repository.

If no version is specified, ksctl will automatically use the latest stable K3s release.
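The validation and fallback behaviour can be sketched as follows. This is a minimal model, not ksctl's implementation: `resolve_k3s_version` is a hypothetical helper, and the release list is hard-coded sample data in the `vX.Y.Z+k3sN` tag format, assumed to contain only stable releases ordered newest first.

```python
def resolve_k3s_version(requested, available):
    """Pick a K3s version from `available`, assumed to be ordered newest first."""
    if not available:
        raise ValueError("no releases available to validate against")
    if not requested:
        # No version given: fall back to the newest stable release.
        return available[0]
    if requested not in available:
        raise ValueError(f"{requested!r} is not a known K3s release")
    return requested


# Sample tags for illustration only, not a real release listing.
releases = ["v1.30.2+k3s1", "v1.29.6+k3s1", "v1.28.11+k3s1"]
print(resolve_k3s_version(None, releases))
print(resolve_k3s_version("v1.29.6+k3s1", releases))
```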

Control Plane Configuration

The K3s control plane in ksctl is deployed with the following optimizations:

- Node taints to prevent workloads from running on control plane nodes

- TLS configuration with certificates for secure communication

- Integration with the external etcd datastore

- Configuration for high-availability when multiple control plane nodes are deployed
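To make these settings more concrete, the sketch below assembles a plausible `k3s server` argument list. The flags are standard K3s server options, but whether ksctl passes exactly these flags or values is an assumption, and the helper name, addresses, and file paths are hypothetical placeholders.

```python
def k3s_server_args(etcd_endpoints, lb_address, ca_file, cert_file, key_file):
    """Assemble `k3s server` arguments for an HA control plane node (sketch)."""
    if not etcd_endpoints:
        raise ValueError("at least one etcd endpoint is required")
    return [
        "server",
        # Keep ordinary workloads off the control plane node.
        "--node-taint=CriticalAddonsOnly=true:NoExecute",
        # Point K3s at the external etcd cluster over TLS.
        "--datastore-endpoint=" + ",".join(etcd_endpoints),
        f"--datastore-cafile={ca_file}",
        f"--datastore-certfile={cert_file}",
        f"--datastore-keyfile={key_file}",
        # Add the load balancer address to the API server certificate.
        f"--tls-san={lb_address}",
    ]


# Placeholder addresses (documentation range) and paths, for illustration only.
args = k3s_server_args(
    ["https://192.0.2.11:2379", "https://192.0.2.12:2379"],
    "192.0.2.10",
    "/path/to/ca.pem",
    "/path/to/etcd.pem",
    "/path/to/etcd-key.pem",
)
print(" ".join(["k3s"] + args))
```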

Advanced Features

K3s in ksctl includes several advanced features:

- HA Setup: Multiple control plane nodes with an external etcd datastore

- Kubeconfig Management: Automatic configuration of kubeconfig with the correct cluster endpoints

- Certificate Management: Secure TLS setup with certificate generation

- Seamless Scaling: Easy addition of worker nodes to the cluster

Limitations

- K3s in ksctl is designed for self-managed clusters only

- Some K3s-specific features may require additional manual configuration

Next Steps

After deploying a K3s cluster with ksctl, you can:

- Access your cluster using the generated kubeconfig

- Install additional components using Kubernetes package managers

- Deploy your applications using kubectl, Helm, or other tools

- Scale your cluster by adding more worker nodes as needed

2 - Kubeadm

Kubeadm for HA clusters on supported providers

Kubeadm is supported with etcd as the datastore

Info

Here the default CNI is none

Overview

Kubeadm is a tool built to provide best-practice “fast paths” for creating Kubernetes clusters. ksctl integrates Kubeadm to provision highly-available Kubernetes clusters across your infrastructure.

Features

- External etcd topology: Uses a dedicated etcd cluster for improved reliability

- HA control plane: Supports multiple control plane nodes for high availability

- Seamless cluster expansion: Easily add worker nodes

- Automatic certificate management: Handles all required PKI setup

- Secure token handling: Manages bootstrap tokens with automatic renewal

Architecture

ksctl with Kubeadm creates a cluster with the following components:

- External etcd cluster: Dedicated nodes for the etcd database

- Control plane nodes: Running the Kubernetes control plane components

- Worker nodes: For running your workloads

- Load balancer: Provides a single entry point to the control plane

┌────────────────┐  ┌────────────────┐  ┌────────────────┐
│                │  │                │  │                │
│ Control Plane  │  │ Control Plane  │  │ Control Plane  │
│                │  │                │  │                │
└────────────────┘  └────────────────┘  └────────────────┘
        │                   │                   │
        └─────────┬─────────┴───────────┬───────┘
                  │                     │
                  ▼                     ▼
       ┌─────────────────────┐ ┌─────────────────┐
       │                     │ │                 │
       │    Load Balancer    │ │  External etcd  │
       │                     │ │                 │
       └─────────────────────┘ └─────────────────┘
                  │
        ┌─────────┴──────────────┐
        │                        │
        ▼                        ▼
┌────────────────┐       ┌────────────────┐
│                │       │                │
│  Worker Node   │  ...  │  Worker Node   │
│                │       │                │
└────────────────┘       └────────────────┘

Technical Details

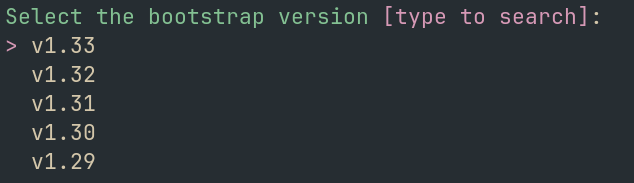

Kubernetes Versions

ksctl supports multiple Kubernetes versions through Kubeadm. The system automatically fetches available versions from the Kubernetes releases, allowing you to choose your preferred version.

Default Version

If you don’t specify a version, ksctl will use the latest stable Kubernetes version available.

Container Runtime

The default container runtime used is containerd, which is set up and configured automatically during cluster deployment.

Network Configuration

Kubeadm in ksctl configures the following networking defaults:

- Pod subnet: 10.244.0.0/16

- Service subnet: 10.96.0.0/12

- DNS domain: cluster.local
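A quick way to reason about these defaults is to check them with Python's standard `ipaddress` module. The helper `conflicts_with_cluster_ranges` is a hypothetical name introduced here; it flags a node or VPC network that would collide with the pod or service ranges listed above.

```python
import ipaddress

# Defaults from the list above.
POD_SUBNET = ipaddress.ip_network("10.244.0.0/16")
SERVICE_SUBNET = ipaddress.ip_network("10.96.0.0/12")


def conflicts_with_cluster_ranges(cidr: str) -> bool:
    """Return True if `cidr` overlaps the pod or service subnet."""
    net = ipaddress.ip_network(cidr)
    return net.overlaps(POD_SUBNET) or net.overlaps(SERVICE_SUBNET)


# The two defaults do not overlap each other.
print(POD_SUBNET.overlaps(SERVICE_SUBNET))   # False
print(POD_SUBNET.num_addresses)              # 65536
print(SERVICE_SUBNET.num_addresses)          # 1048576

# A node network inside 10.96.0.0/12 would collide with Service IPs.
print(conflicts_with_cluster_ranges("10.100.0.0/16"))   # True
print(conflicts_with_cluster_ranges("192.168.0.0/16"))  # False
```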

CNI (Container Network Interface)

CNI Configuration

By default, Kubeadm in ksctl uses `none` as the CNI. You can deploy your preferred CNI solution using ksctl’s addon system after cluster creation.

Bootstrap Process

The bootstrap process follows these steps:

1. Control plane initialization:

   - Generate bootstrap tokens and certificate keys

   - Configure the first control plane node

   - Set up the external etcd connection

   - Initialize the Kubernetes API server

2. Additional control plane nodes:

   - Join additional control plane nodes to form an HA cluster

   - Share certificates securely between nodes

3. Worker node setup:

   - Install required components (containerd, kubelet, etc.)

   - Join worker nodes to the cluster using bootstrap tokens
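The join steps above can be sketched as command construction. The `kubeadm join` flags used here are standard kubeadm options, but this is only an illustrative model: ksctl may drive kubeadm differently (for example through a configuration file), and the helper name, endpoint, token, hash, and key values are hypothetical placeholders.

```python
import re

# kubeadm bootstrap tokens have the form "abcdef.0123456789abcdef".
TOKEN_RE = re.compile(r"^[a-z0-9]{6}\.[a-z0-9]{16}$")


def kubeadm_join_command(endpoint, token, ca_cert_hash, certificate_key=None):
    """Build a `kubeadm join` command line (illustrative sketch)."""
    if not TOKEN_RE.match(token):
        raise ValueError("token does not match the kubeadm bootstrap token format")
    cmd = [
        "kubeadm", "join", endpoint,
        "--token", token,
        "--discovery-token-ca-cert-hash", f"sha256:{ca_cert_hash}",
    ]
    if certificate_key is not None:
        # Control plane nodes also need the key that decrypts the shared certificates.
        cmd += ["--control-plane", "--certificate-key", certificate_key]
    return cmd


# Placeholder values only; real tokens, hashes, and keys are generated at init time.
endpoint = "192.0.2.10:6443"        # load balancer address
token = "abcdef.0123456789abcdef"
ca_hash = "0" * 64
print(" ".join(kubeadm_join_command(endpoint, token, ca_hash)))
print(" ".join(kubeadm_join_command(endpoint, token, ca_hash, certificate_key="f" * 64)))
```

Passing `certificate_key` switches the same helper from a worker join to a control plane join, which mirrors how steps 2 and 3 differ only in the certificate sharing.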

Cluster Access

Once your cluster is created, ksctl automatically configures and saves your kubeconfig file with appropriate context names based on your cluster details.

Limitations

- All nodes must run Ubuntu-based Linux distributions